AI fakers exposed in tech dev recruitment: postmortem

A full-remote security startup nearly hired a backend engineer who doesn’t exist, after a candidate used an AI filter as an on-screen disguise in video interviews. Learnings for tech companies.

Imagine you’re the cofounder of a startup hiring its first few software engineers, and there’s a candidate who smashes the technical interview, and is the only one to complete the full coding interview – doing so with time to spare. Their communication style is a bit unconventional, but this could be explained by language differences, and isn’t a red flag. So, the promising candidate gets a thumbs up, and pretty soon they’re on screen in a non-technical final interview with the other cofounder, via video.

Then things get weird. Your cofounder pings you mid-interview to report that the candidate from Poland speaks no Polish whatsoever, and also that there is something just not right about their appearance on screen. The recruitment of this candidate gets derailed by suspicious details which erode everyone’s confidence in making the hire. Soon afterwards, the same thing happens again with another candidate.

Later, you discover that two impostors hiding behind deepfake avatars almost succeeded in tricking your startup into hiring them. This may sound like the stuff of fiction, but it really did happen recently to a startup called Vidoc Security. Fortunately, they caught the AI impostors – and the second time it happened they got video evidence.

To find out more about this unsettling, fascinating episode from the intersection of cutting-edge AI and tech recruitment, the Pragmatic Engineer sat down with Vidoc cofounder, Dawid Moczadło. Today, we cover:

1. The first AI faker. Vidoc Security nearly made an offer to a fake candidate, but their back story raised too many questions.

2. The second AI faker. The next time a job candidate raised similar suspicions, the team was prepared and confronted the faker during the call – and recorded it.

3. How to avoid being tricked by AI candidates. Take the risk seriously, have applicants turn off video filters and verify that they do this, record interviews, and get firm proof of identity before any offer.

4. Foreign state interference? There’s evidence that many AI candidates could be part of a coordinated governmental operation targeting hundreds of western tech businesses. Full-remote workplaces are the most targeted.

5. Return of in-person final rounds? This looks like an obvious consequence of these incidents.

6. New AI risks for tech businesses. Remote interviews may have to change, while devs also risk introducing security vulnerabilities by accepting AI suggestions without critique.

7. Hiring funnel. The story began with this job posting for a backend engineer. The startup shares its hiring funnel, giving a sense of how competitive full-remote startup positions currently are. As context: from 500 applications, two hires have been made – and Vidoc is still actively recruiting for this position.

Since every candidate in this article is a cheater with an AI-generated mask of a different face, and a false professional identity, we share all the made-up resumes, CVs, videos, and photos, to give a sense of how things played out. If you’re currently hiring, or plan to, the nature and sophistication of the fake-applicant scam targeting this startup provides food for thought.

For more tips on detecting fake applicants, you can also check this handy PDF guide, created by the Vidoc engineering team.

1. The first AI faker

Vidoc Security is a security provider offering automated code reviews to detect security issues. The idea for the company came from two security engineers and ethical hackers, Dawid Moczadło and Klaudia Kloc. Previously, they hacked top tech companies like Meta, then disclosed the vulnerabilities to those companies in order to collect bounties and get onto their ethical hacking leaderboards.

With the rise of LLMs in the past couple of years, Dawid and Klaudia spotted an opportunity to create a tool that works the same way they did when searching for security vulnerabilities: looking across the broader codebase, checking how components interact, which parts could be insecure, and more. Basically, they could use an LLM to build a tool with some of their own know-how about what works when hacking well-designed systems.

Their idea attracted investors, and Dawid and Klaudia raised a $600K seed round in 2023, and a further $2.5M in seed funding in August 2024. With this seed funding in the bank, the company began hiring. They posted a job ad for a backend engineer, and started to interview candidates. (We share details on the exact hiring funnel and statistics below, in “The hiring funnel”.)

One promising candidate was called Makary Krol. His LinkedIn profile is still active:

Below is a step-by-step summary of how the recruitment process for the impostor candidate went, based on Vidoc Security's records, including the bogus resume. By the end of step 5, the team were certain they were the target of a scam.

1. Resume screening: ✅ Resume looks solid:

2. First-round screening. ✅⚠️ A 15-30 minute call with Paulina, head of operations. It was a bit odd that the candidate did not speak any Polish, despite being based in Poland and having graduated from Warsaw University of Technology. He spoke in broken English, and with a very strong accent that sounded Asian, but these weren’t big red flags, and the candidate sounded motivated.

3. Hiring manager interview. ✅⚠️ The candidate was clearly well-rounded and a technical screening was the obvious next step. Dawid’s only “yellow flag” was that their communication skills were poor, but he figured a technical interview would be a chance to show their core coding and technical skills.

4. Technical interview. ✅✅ The candidate absolutely smashed it, being the first to finish all coding tasks and follow-up questions in the allocated time of 2 hours, which hadn’t happened before. Dawid was surprised by how competent they were at coding and technical problem solving. The coding abilities of this candidate were definitely not fake: they were a seasoned, very capable engineer.

5. Final hiring manager interview. ‼️⚠️ This was a non-technical interview with cofounder, Klaudia, who dug into the specifics of their background and grew suspicious. The candidate gave some details about previous positions, but she increasingly found herself disbelieving their back story and resume, the more time she spent with the candidate. Dawid shared the suspicion and they became certain that the figure on screen was far from what they claimed to be.

By the end of the recruitment process, Vidoc believed they had nearly been played, and had come worryingly close to extending an offer to a fake candidate using a false identity in their documents, and an AI filter to mask their face on screen. However, they had no evidence of this, and didn’t record the interviews, so had only their impressions and notes.

When Dawid recounted this episode to peers, he was met with disbelief. Founder friends – along with most other people – thought the team were overreacting and misguided. Eventually, Dawid stopped sharing the story and began to doubt the team’s suspicions about the candidate.

After the incident, Vidoc added an onsite interview as a final step in the recruitment process, and held a retrospective to figure out how to avoid something similar again. Interestingly, there wasn’t much that could be done about the potential risks of remote interviews being taken by applicants pretending to be someone else.

2. The second AI faker

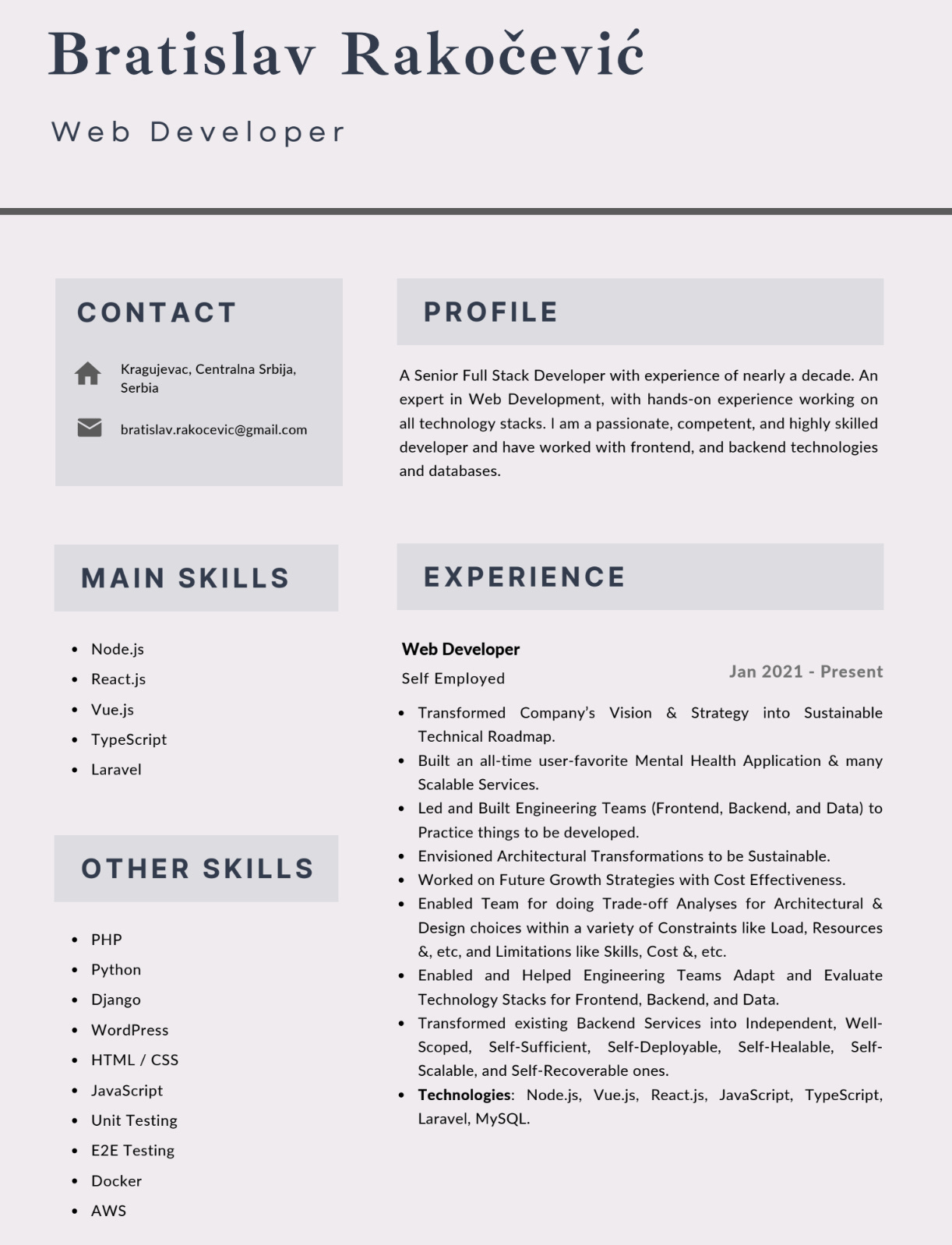

Two months after the suspected fake job candidate episode, another promising applicant appeared, going by the name Bratislav Rakočević, located in Serbia, with a university education there. His LinkedIn was pretty basic:

This application looked good, so the company ran the usual recruitment process, below (including the candidate’s made-up resume):

1. Resume screening: ✅⚠️ Solid on technologies, but with too many buzzwords for their current, self-employed role. Of course, it’s not unusual that some devs don’t thrive at writing resumes. Their credentials merited a call, especially as the “candidate” had “worked” at HubSpot and Woosh:

2. First-round screening. ✅⚠️ Communication was a bit choppy – then again, we’re talking about a non-native English speaker. The candidate did not speak Serbian, despite graduating from the University of Kragujevac, in Serbia. Once again, they sounded motivated, so they proceeded to the next round.

3. Hiring manager interview: AI filter exposed ‼️‼️ Dawid conducted this interview, and immediately felt that something was off about the candidate. First of all, Dawid felt the candidate on the call looked somewhat unlike their low-resolution LinkedIn profile:

As a rule, Vidoc never records interviews, but the team had been burnt once before and had faced scepticism about it from peers afterwards. So Dawid hit record, and asked the candidate to make a simple gesture which bamboozles the current crop of AI filters:

“Can you take your hand and put it in front of your face and cover it partially?”

The candidate refused, so Dawid ended the conversation. Watch the full video of the encounter.

Later, several people pointed out that the AI mask looked uncannily similar to a prominent Polish politician named Sławomir Mentzen:

Looking back on these twin incidents, the team suspects the same individual may have been behind the AI disguises of both fake candidates because:

Their voices sounded almost identical

The second candidate answered questions with surprising confidence – almost like he’d been asked them before

…a hunch that they had spoken before

The AI filter for the second faker was of much lower quality than the first. The first candidate went undetected through several interviews, despite almost certainly using an AI filter as well. Dawid said the second candidate’s filter looked obvious on the screen – but on that occasion the Vidoc Security team were on high alert for any possible deception. It’s worth remembering that AI filters improve with time, so spotting a good one won’t always be easy.

3. How to avoid being tricked by AI candidates

How can tech companies hiring engineers protect themselves against AI-disguised candidates? Some suggestions:

This is not a drill; take the threat seriously: If you think your company is too small to fall victim, think again. Vidoc Security was just a two-person startup in February 2023 with no funding raised, when it was first targeted. The first fake profile cold emailed them on LinkedIn to ask if they were hiring.

It’s possible there is a large, state-level operation running a pool of bogus applicants who hide behind fake resumes and AI filters in video interviews. If this operation can find the smallest of startups to target, it’s safe to assume they could apply to any other company, as well.

Don’t assume all job seekers on local job boards are genuine. Vidoc posted on local job boards, like the one in Poland. But whoever was behind this operation figured out that it’s profitable to pose as Serbian, Polish, and other eastern European profiles, and to apply via small, low-profile job boards. I didn’t even know about the job board “JustJoinIT” (popular in Poland) but the fakers were already active on it.

Ask candidates to turn off all filters – including background ones. Filters that blur or replace the background are ubiquitous these days. During a video interview, it’s fair to ask a candidate to turn off all filters. After that, if there are still unusual minor details on their face or the background, then it’s safe to assume that a candidate did not turn off their filters. In an interview for a full-remote position, this could be justification enough to terminate the call.

Ask the candidate to do things that AI filters cannot handle – yet, such as:

Turning their face from side to side

Placing a hand in front of their face

Standing up and walking backward from the camera, showing more body detail

In the circumstances, it should be justifiable to run through some or all of these tests, as well as asking for all filters to be turned off. Stu Sjouwerman, CEO of KnowBe4, shared additional details on what his company learned from hiring a North Korean worker with a false identity:

Do not rely on email references alone. For reference checks, also do phone calls or video calls with references.

Absence of a digital footprint is suspicious. If there’s no trace of a candidate anywhere online, it could be a flag.

Use a phone carrier lookup tool before calling a candidate. Many fake candidates provide a VoIP number. Treat any such number as a red flag and refuse to call candidates on it.

Conduct all interviews using video. Fake candidates can slip through written application processes more easily.

Expect identity theft. In the case of KnowBe4, the identity of the person they hired was real and stolen, meaning that a background check came back clean.

Put better internal monitoring in place. Monitor access to systems by onboarded employees – and pay extra attention to new joiners.

When suspicious, ask low-key “cultural” questions. For example, if a candidate claims to be from Seattle but there is reason to suspect otherwise, ask a question like, “I see you are from Seattle, what's your favorite place to eat, and what do you usually get?” Faking this knowledge in a convincing way is hard for someone who has never been to Seattle, and doesn’t know the local cuisine.

Different shipping address for laptop. If a candidate asks for an onboarding laptop to be shipped to a different address from where they are supposed to be living or working, it’s a red flag.

Additional tips:

Record video interviews and save them for later. AI-disguised candidates might be hard to spot in a live scenario, but analyzing the video later could be helpful. Note that recording an interview will most likely require disclosing this to the candidate and getting their agreement.

Get notarized proof of identity. This advice comes from Google, after they saw hundreds of US companies fall victim to North Korean workers by hiring them for tech roles.

Vidoc also created a guide to detect fake candidates in your hiring pipeline: see this PDF document here.

4. Foreign state interference?

So, who or what might be behind these two incidents at Vidoc: a lone individual, several individuals, or something else entirely?