Five Days of Chaos at OpenAI, and What Started It

It’s been five incredibly turbulent days at the leading AI tech company, with the exit and then return of CEO Sam Altman. Tensions likely started years ago, when its nonprofit charter changed.

👋 Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover topics related to Big Tech and startups through the lens of engineering managers and senior engineers. In this article, we cover two out of seven topics from today’s subscriber-only issue: What is OpenAI, Really? To get full issues twice a week, subscribe to the newsletter.

OpenAI is the clear leader in the artificial intelligence (AI) sector, and probably the hottest company in tech right now. Just last week, we published the first-ever deep dive into the engineering culture at OpenAI, looking at how ChatGPT ships so ridiculously fast. The short of it is that ChatGPT is a one-year-old startup within the three-year-old Applied group. OpenAI has high talent density and tight integration between engineering and research. ChatGPT operates like an independent startup, releasing frequently and incrementally.

However, just days after last week’s article, the tech world was shocked as OpenAI suddenly fell into a crisis that threatened its very existence. Last Friday, the company’s board fired CEO Sam Altman, and cofounder Greg Brockman quit. By Sunday, employees were revolting and demanding that Sam and Greg be brought back. By Monday, OpenAI had hired a new interim CEO, and Microsoft had announced that Altman and Brockman would join it to head up a newly created AI division. By Monday night, 743 of the 778 OpenAI employees had signed a petition threatening to follow Sam and Greg to Microsoft unless OpenAI’s board resigned and Sam returned as CEO. That same day, Microsoft confirmed it was ready to hire all OpenAI staff.

For a few hours, there was a very real possibility that OpenAI would shrivel to around 35 employees, with 95% of its staff becoming Microsoft employees overnight.

Finally, on Tuesday night, OpenAI’s board announced that an agreement had been reached: Sam Altman returns as CEO, the board is reshaped, and things mostly get back to normal.

Last week, Evan Morikawa, who leads around half of OpenAI’s engineers, shared insights about its engineering culture in this newsletter. On Wednesday, he summarized events as “the most insane 100 hours of my career.”

Today, we analyze what happened and what caused this sudden near-death experience for OpenAI, and look at how OpenAI’s unusual structure, a for-profit inside a nonprofit, created conflicts that likely led to last week’s events.

In this issue, we cover:

1. Five days of chaos: the timeline

Friday, 17 Nov: The firing

OpenAI’s board dynamics

Saturday, 18 Nov: fury at Microsoft

Sunday, 19 Nov: efforts to undo the mess

Monday, 20 Nov: events speed up

Tuesday, 21 Nov: breaking point

2. From nonprofit, to making profits part of compensation packages

Let’s jump in:

1. Five days of chaos: the timeline

We’ll use PST (Pacific Standard Time, i.e. California time) for all timestamps. OpenAI is headquartered in San Francisco, where the events unfolded.

Friday, 17 Nov: The firing

Noon (12pm): Sam Altman, CEO of OpenAI and board member, joins a board meeting to which he was invited. At this meeting, he’s sacked, effective immediately. The board has 6 members, including Sam. The board’s chair, Greg Brockman, is not present.

12:19pm: Greg Brockman, cofounder of OpenAI, its president, and chair of the board, gets a message from Ilya Sutskever, cofounder, chief scientist, and fellow board member. Ilya asks Greg for a quick call.

12:23pm: Greg joins a Google Meet call with the other four board members. He’s told that he is being removed from the board immediately, and that Sam has been fired.

OpenAI publishes a blog post at the same time announcing this firing, writing:

“The board of directors of OpenAI, Inc., the 501(c)(3) that acts as the overall governing body for all OpenAI activities, today announced that Sam Altman will depart as CEO and leave the board of directors. Mira Murati, the company’s chief technology officer, will serve as interim CEO, effective immediately. (...)

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

1:46pm: Sam Altman announces on Twitter that his time at OpenAI is over, saying he’ll have more to say later about what’s next.

4:00pm: Greg Brockman sends a message to the OpenAI team:

“Hi everyone,

I’m super proud of what we’ve all built together since starting in my apartment 8 years ago. We’ve been through tough & great times together, accomplishing so much despite all the reasons it should have been impossible.

But based on today’s news, I quit.”

With each development, the reaction is disbelief inside and outside OpenAI: Sam fired; what? Greg quit; how? After all, Sam Altman was the public face for OpenAI for four years; the leader who championed the wildly successful ChatGPT. Greg had been there since the start of OpenAI and was a popular leader, like Sam.

The story of OpenAI’s board firing Sam Altman became the most upvoted story on Hacker News in the past five years, showing just how unexpected and impactful it was for the tech community. It is the third most upvoted story of all time, behind Stephen Hawking’s passing and the news of Apple refusing the US government’s request to install a backdoor on iOS.

OpenAI’s board dynamics

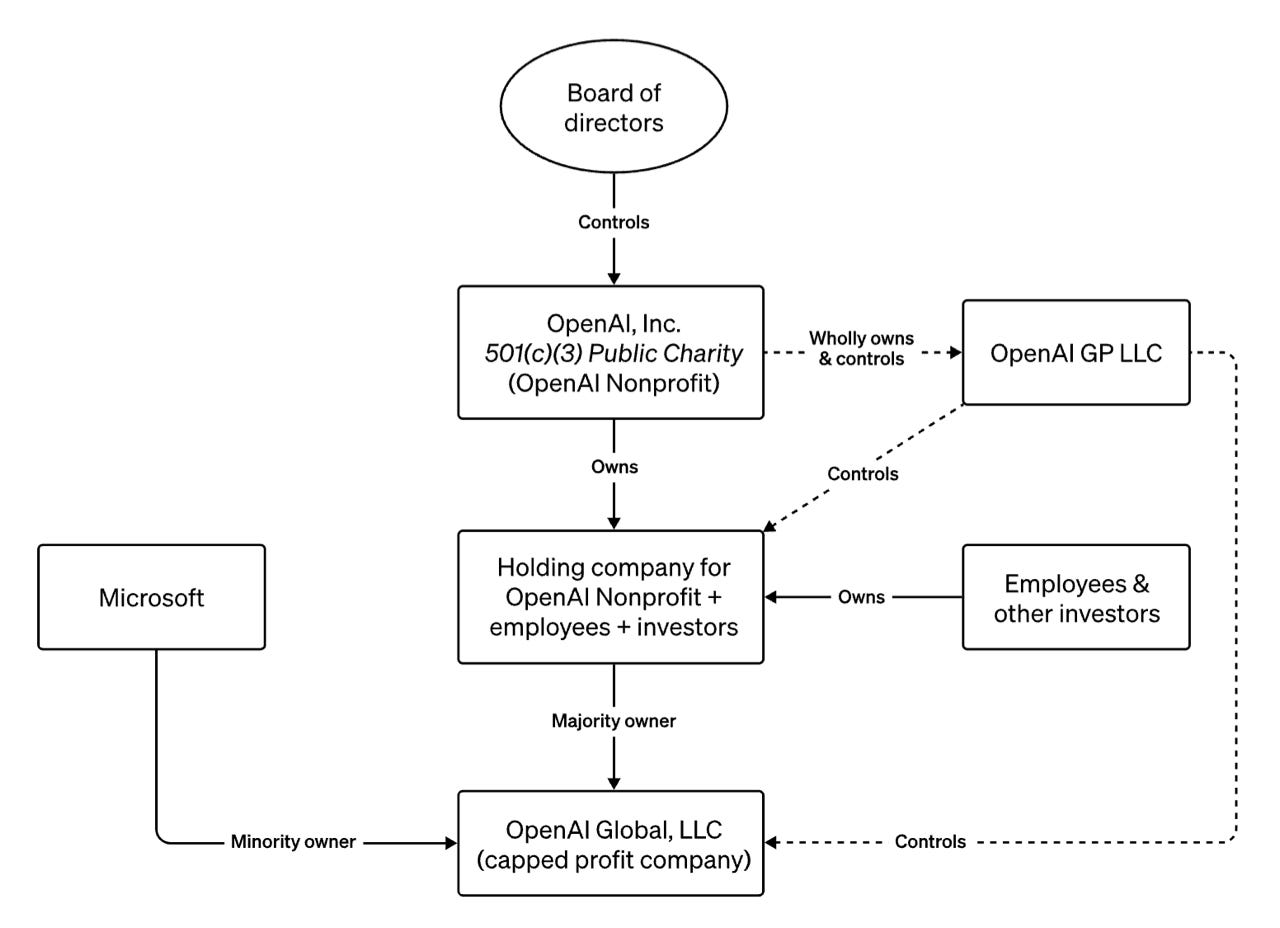

So how did this firing take place, and what about the board which executed it? To understand this, here’s an overview of OpenAI’s admittedly exotic corporate structure, from its website:

OpenAI started as a nonprofit in 2015, and the board controls this nonprofit. In 2019, a “capped profit” company was created, which we’ll go into later; the nonprofit owns and controls this for-profit part of OpenAI as well. Sam Altman was CEO of the OpenAI nonprofit, and therefore answered to its board.

OpenAI’s board of directors consisted of six people before noon on Friday, and looked well balanced, with equal numbers of employee and non-employee members:

Greg Brockman (cofounder, board chairman and president) – employee

Sam Altman (CEO) – employee

Ilya Sutskever (cofounder, chief scientist) – employee

Adam D’Angelo (cofounder and CEO of Quora) – non-employee

Helen Toner (director at Georgetown's Center for Security and Emerging Technology) – non-employee

Tasha McCauley (cofounder and former CEO of a robotics company, and married to Joseph Gordon-Levitt) – non-employee

This dynamic changed rapidly when four board members ganged up to remove the other two.

Interestingly, the board acted without its chair, which could raise governance questions. We also later learned that OpenAI’s biggest investor, Microsoft, which invested $10B in January 2023, was not notified of the firing in advance.

Saturday, 18 Nov: fury at Microsoft

On Saturday, confusion reigned. The board wrote: “[Sam] was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities.” What did this vague statement really mean? Had Sam hidden essential details, or withheld vital information? No such details were released.

What did emerge, however, were details on who organized this coup, and Microsoft’s anger about it. From Ars Technica:

“The move [firing] also blindsided key investor and minority owner Microsoft, reportedly making CEO Satya Nadella furious. As Friday night wore on, reports emerged that the ousting was likely orchestrated by Chief Scientist Ilya Sutskever over concerns about the safety and speed of OpenAI's tech deployment. (...)

Internally at OpenAI, insiders say that disagreements had emerged over the speed at which Altman was pushing for commercialization and company growth, with Sutskever arguing to slow things down. Sources told reporter Kara Swisher that OpenAI's Dev Day event on November 6, with Altman front and center in a keynote pushing consumer-like products, was an ‘inflection moment of Altman pushing too far, too fast.’”

OpenAI staff signal strong public support for Altman. Around 9pm, Altman tweets: “i love the openai team so much.” Hundreds of OpenAI staff reply with a heart emoji to show their support. It’s rumored that those responding could be ready to quit OpenAI and follow Sam wherever he goes next. Interim CEO Mira Murati also responds with a heart, indicating she is with “team Sam.”

This event was the first public indication of employees’ overwhelming support for Sam and Greg.

Sunday, 19 Nov: efforts to undo the mess

Investors, especially Microsoft, are unhappy and start pushing to reinstate Altman as CEO. As Microsoft is a massive investor, it’s reasonable to expect that it has a big say, and can get Sam Altman back to leading the company before the markets open on Monday. The markets matter to Microsoft because, without a resolution, its stock price could drop due to its financial links to OpenAI.

1pm: Altman enters OpenAI’s offices to meet the board and discuss his possible return. Before going into the meeting, he posts on social media, signaling to the board that it’s the first and last time he’ll wear a guest badge at the company he ran for four years.

According to Bloomberg, key staff inside OpenAI pushing for Altman’s return as CEO include interim CEO Mira Murati, chief strategy officer Jason Kwon, and chief operating officer Brad Lightcap.

5pm: The deadline for the board to agree to Altman’s demands. Nothing is announced.

9pm: OpenAI gets another new CEO. In a further unexpected twist, the board does not announce Altman’s return as CEO, as investors had pushed for, but instead names a new interim CEO: Twitch cofounder Emmett Shear. He later shares that he made the decision within a few hours of getting the call from the board.

Before offering the CEO role to Emmett, the OpenAI board offered it to former GitHub CEO Nat Friedman and to Scale AI CEO Alex Wang. Both declined. The board very clearly did not want Altman, and was scrambling to replace its first interim CEO, Murati, because she also wanted Altman back.

Employees refuse to attend an emergency all-hands. Following Shear’s appointment, a last-minute all-hands meeting is organized. Staff refuse to attend, and several people respond with a “fuck you” emoji, as per The Verge.

11:53pm: Sam to join Microsoft? Satya Nadella drops another bomb. Responding to OpenAI’s CEO announcement, the Microsoft CEO tweets that Sam and Greg are joining Microsoft, “together with their colleagues.” Remember those heart replies under Altman’s earlier tweet? All signs point to an imminent brain drain from OpenAI to Microsoft.

Monday, 20 Nov: events speed up

5am: Ilya Sutskever, supposedly the organizer behind the coup, makes a full reversal, saying he deeply regrets his participation in the board’s actions.

This means that on the remaining 4-person board, only 3 members now back the coup, with one, Sutskever, on “team Sam.” Could this mean there’s hope of reversing the board’s actions? What about Microsoft’s CEO tweeting that Sam and Greg are to become Microsoft employees?

An employee revolt gathers momentum and threatens OpenAI’s existence. Around 1:30am, employees start a petition, threatening:

That all the undersigned may choose to leave OpenAI and join Microsoft

… unless all current board members resign, the board appoints two new independent directors like Bret Taylor and Will Hurd, and reinstates Altman and Brockman

At 2am, former interim CEO Mira Murati tweets “OpenAI is nothing without its people,” and once again hundreds of OpenAI staff repost the phrase as a sign of defiance. Those posting it also sign the petition.

By 6am, 505 of 778 employees have signed the petition, many of them in the middle of the night. Among the signatories is board member Ilya Sutskever. That is 65% of staff threatening to quit.

By 11am, the number is at 700 (90%). By noon, it’s 715 (92%). And by 3pm, it’s 743 (95%). Employees make it clear they’re ready to walk, placing the board under extra pressure to act.

On Monday, Satya Nadella does a rapid round of media appearances. Due to the turmoil, current OpenAI customers are nervous and could consider moving to Anthropic, Google, Cohere, or other AI competitors. In an effort to calm things, Nadella appears on CNBC, Bloomberg TV, and the “On with Kara Swisher” podcast. In these appearances, it’s apparent that Nadella doesn’t want OpenAI staff to join Microsoft, but that this would be an option if OpenAI cannot solve its problems. Answering a question, Nadella admits that Altman and Brockman are not Microsoft employees, at least not yet.

Nadella’s goal seemed to be exactly what OpenAI employees wanted: the board gone, Sam and Greg reinstated, and Microsoft continuing to be a strategic partner to OpenAI.

Salesforce’s cofounder and CEO makes a bold offer to poach OpenAI staff. At noon, Marc Benioff tweets that Salesforce will “match any OpenAI researcher who has tendered their resignation full cash & equity OTE to immediately join our Salesforce Einstein Trusted AI research team under Silvio Savarese.”

This is a very generous offer: OpenAI staff who hold large amounts of PPUs (profit participation units), which are not liquid and whose value is at risk due to all the uncertainty, would get the same amount in cash or Salesforce stock!

Benioff uses this opportunity to advertise Salesforce’s Einstein platform, writing that “Einstein is the most successful enterprise AI Platform, completing 1 Trillion predictive & generative transactions this week!” After days of chaos at OpenAI, this offer feels like a fair shot, and also a reminder to OpenAI’s board that Microsoft is not the only company it needs to worry about.

Tuesday, 21 Nov: breaking point

The board has still not responded to the staff petition.

6am: Microsoft CTO, Kevin Scott, reassures all OpenAI staff that Microsoft will have a position for them and will match their current compensation. This message could have been a response to Salesforce’s aggressive hiring tactic.

9am: News about Sam’s possible return to OpenAI surfaces yet again. Reportedly, interim CEO Emmett Shear has told the board he will quit if it cannot provide evidence of wrongdoing. It’s been four days, and there are still no details on why Sam was fired. If the new CEO cannot find compelling reasons, the board could find itself in trouble.

10pm: It’s over. An agreement is reached: Altman returns to OpenAI as CEO. A new board is formed with Bret Taylor (former CTO of Facebook, chair of the board), Larry Summers (former president of Harvard University), and Adam D'Angelo (existing board member, cofounder and CEO of Quora).

The OpenAI team celebrates in their San Francisco headquarters, and now has a peaceful Thanksgiving to look forward to.

How did the world’s most envied tech company, valued at around $86B, plunge into chaos for five days straight? Perhaps all of this was much more than the coup it might look like from the outside, and the roles of “good” (“team Sam”) and “bad” (the board) might not be as clear-cut as they seem.

Let’s go back a few years, to when the seeds of this conflict were likely sown.

2. From nonprofit, to making profits part of compensation packages

When OpenAI was founded in 2015, the company introduced itself like this:

“OpenAI is a non-profit artificial intelligence research company. Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. Since our research is free from financial obligations, we can better focus on a positive human impact.”

This is a worthy mission and refreshingly different from the for-profit model that’s common at most venture-funded tech companies. But over time, this approach has been predictably watered down to what is closer to a for-profit operation.

The “capped profit” change. On 11 March 2019, the company announced it had created a “capped-profit” company. OpenAI cofounders Greg Brockman and Ilya Sutskever explained it like this:

“We want to increase our ability to raise capital while still serving our mission, and no pre-existing legal structure we know of strikes the right balance. Our solution is to create OpenAI LP as a hybrid of a for-profit and nonprofit—which we are calling a “capped-profit” company.

The fundamental idea of OpenAI LP is that investors and employees can get a capped return if we succeed at our mission, which allows us to raise investment capital and attract employees with startup-like equity. (...) Returns for our first round of investors are capped at 100x their investment.”

This so-called “capped profit” is unlikely to be a “cap” for practical purposes. It could be tempting to think that OpenAI’s approach of capping profit at 100x the investment amount is somehow noble or selfless. However, in practice, there’s little meaningful difference between capping profits at this level and having no cap, for two reasons:

OpenAI needs a lot of capital before it can generate profits. In this sense, the company is not too dissimilar from capital-intensive companies like ridesharing giant Uber, which has raised about $25B in funding and generated less than $1B in cumulative profits over 12 years. Uber is worth $114B at the time of publication, yet its cumulative profits are less than 1x the capital it has raised!

OpenAI has a “cap” of at least $1,200B in profits! OpenAI raised $12.3B from investors, as per Dealroom, which means the company’s “profit cap” is around $1,200B, or $1.2T. To put this in perspective: Amazon has generated a total of $100B in profits since being founded in 1994, Google has earned $400B in profit during its 25-year existence, and Microsoft has generated a total profit of $534B in the past quarter century. Apple is one of the most profitable companies of all time, yet not even Apple has generated this much profit since being founded in 1976: its total profits are in the region of $700B over nearly 50 years.
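The arithmetic behind both points can be checked with a short Python sketch. It uses only the figures quoted above and, like the paragraph, assumes the 100x multiple applies to all $12.3B raised, although OpenAI only stated that cap for its first round of investors. Uber’s cumulative profit is treated as exactly $1B, an upper bound.

```python
# Back-of-the-envelope check of the "capped profit" argument.
# Assumption: the 100x cap applies to all capital raised, as the text assumes;
# OpenAI only stated 100x for its first round of investors.

BILLION = 1_000_000_000

raised = 12_300_000_000  # $12.3B raised, per Dealroom
cap_multiple = 100       # stated cap for first-round investors
profit_cap = raised * cap_multiple  # $1,230B, i.e. roughly $1.2T

# Cumulative profits quoted in the article, in USD
cumulative_profits = {
    "Amazon": 100 * BILLION,
    "Google": 400 * BILLION,
    "Microsoft": 534 * BILLION,
    "Apple": 700 * BILLION,
}

# Uber: ~$25B raised, under $1B in cumulative profits ($1B used as an upper bound)
uber_profit_multiple = (1 * BILLION) / (25 * BILLION)

print(f"OpenAI profit cap: ${profit_cap // BILLION:,}B")
for company, profit in cumulative_profits.items():
    print(f"{company}: ${profit // BILLION:,}B, {profit / profit_cap:.0%} of the cap")
print(f"Uber: under {uber_profit_multiple:.2f}x its funding returned as profit")
```

Even Apple’s total comes to less than 60% of the implied cap, which is the sense in which the cap is unlikely to bind in practice.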

To be clear, there is nothing wrong with a for-profit approach; we’ve seen many of tech’s biggest innovations come from for-profit companies! I cite the above examples to illustrate why OpenAI’s cap is essentially meaningless and illusory.

On the same day this for-profit shift was announced, Altman – former president of startup accelerator Y Combinator – joined OpenAI as the CEO.

Prior to this setup, employees at OpenAI did not receive equity, only a base salary. Following the change to a “capped-profit” model, OpenAI introduced a form of equity compensation unique to itself.

Median compensation at OpenAI is $905,000/year, as per Levels.fyi data based on 20 data points. This is a very high median, even within Big Tech, and underscores why Salesforce’s offer to match this amount in liquid compensation was so generous.

Profit participation units (PPUs) are the unusual kind of equity that OpenAI issues to its staff. Here is how they work, as also revealed by Levels.fyi:

Employees get a healthy base salary. For L5, which maps roughly to staff engineer at companies like Google, the median is around $300,000/year.

An L5 engineer received PPUs valued at $2M, vesting over 4 years: so $500,000 per year.

PPUs entitle their holders to a percentage of the profits generated by OpenAI. For example, if OpenAI issues a total of 1,000 PPUs, and an employee has vested 10 of these (1% of them), then they are entitled to 1% of the profits. OpenAI valuing PPUs at $2M means it expects the units issued to yield $2M in profit for their holder, assuming leadership’s profit projections are accurate.
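The PPU mechanics in the list above boil down to simple arithmetic. The Python sketch below is a simplified model, not OpenAI’s actual terms: the $2M four-year grant and the 1,000-unit pool come from the examples above, while straight-line vesting and the $1B profit figure are hypothetical values chosen for illustration. The function names are likewise made up for this sketch.

```python
from fractions import Fraction


def annual_vesting(grant_value: int, years: int) -> int:
    """Value vesting per year, assuming a hypothetical straight-line schedule."""
    return grant_value // years


def ppu_profit_share(vested_units: int, total_units: int) -> Fraction:
    """Fraction of profits a holder is entitled to under the simplified model."""
    return Fraction(vested_units, total_units)


def ppu_payout(vested_units: int, total_units: int, profits: int) -> Fraction:
    """Holder's payout for a given (hypothetical) profit amount."""
    return ppu_profit_share(vested_units, total_units) * profits


# Figures from the examples above
per_year = annual_vesting(2_000_000, 4)  # $500,000 per year
share = ppu_profit_share(10, 1_000)      # 1/100, i.e. 1% of profits

# Hypothetical: OpenAI distributes $1B in profits
payout = ppu_payout(10, 1_000, 1_000_000_000)  # $10,000,000

print(per_year, share, payout)  # prints: 500000 1/100 10000000
```

Exact `Fraction` arithmetic avoids floating-point rounding when splitting large profit pools. The key property is that the payout is zero whenever profits are zero, which is why the value of PPUs depends entirely on OpenAI becoming profitable.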

Compensation at OpenAI is based on the expectation of healthy profits, in contrast to the nonprofit core of the company. There is nothing wrong with aiming to generate profits; the promise of profits is what motivates investors. It is also a major reason why employees join OpenAI: if the company never generated profits, the equity portion of their compensation would be worth zero. And if PPUs are worthless, employees would take a major pay cut compared to other Big Tech companies.

However, as OpenAI now must generate profits to keep employees and investors happy, this line of the company’s introduction seems at risk:

“Since our research is free from financial obligations, we can better focus on a positive human impact.”

OpenAI clearly wanted to “have its cake and eat it.” The changes made in 2019 attempted to inject a for-profit incentive into the nonprofit, which created some obvious conflicts:

Wanting to stay a nonprofit to pursue its humanity-first goals…

… but also putting profit-making first, in order to attract investors and hire world-class talent

Stating: “Our mission is to ensure that artificial general intelligence benefits all of humanity…”

… yet the majority of employees’ total compensation is tied to the company generating profits, which means selling services at a premium to those who are willing and able to afford them, as opposed to “benefiting humanity” in general

The contradictions also apply to safety and speed. In order to benefit humanity, moving slower and more safely is the sensible approach. However, to generate profits, OpenAI needs to be first to market and first to monetize. And OpenAI has indeed moved fastest, with ChatGPT taking the world by storm. The company claims to prioritize safety as much as speed, but internally, not everyone sees it that way.

These were two out of the seven topics covered in this week’s The Pulse. The full edition further analyzes the events at OpenAI, and also covers:

ChatGPT too successful, too fast?

Microsoft’s interest in a standalone OpenAI

OpenAI’s CEO: does something feel off?

OpenAI’s board: plenty of questions

What is OpenAI, anyway?