Microsoft is dogfooding AI dev tools’ future

Impressions from a week in Seattle, at Microsoft’s annual developer conference. Microsoft is eating its own dogfood with Copilot – and it’s not tasty

Last week, I was in Seattle and stopped by Microsoft’s annual developer conference, BUILD. I spent a bunch of time with GitHub CEO Thomas Dohmke, interviewed Scott Guthrie, who leads Microsoft’s Cloud+AI group, and chatted with Jay Parikh, who heads up a new org called CoreAI. Interviews with Thomas and Scott will be published as podcasts soon.

I also talked extensively with developers at BUILD, and with engineering leaders at tech companies in the city. Today’s issue is a roundup of everything I heard and learned. We cover:

AI, AI, AI. It’s all about artificial intelligence at Microsoft – to the point where some attendees at BUILD were tired of hearing about it!

Copilot as “peer programmer.” Many AI dev tools startups are building replacements for software engineers, but Microsoft is doing the opposite. Copilot is positioned to keep developers in charge, while increasing productivity.

AI agents for day-to-day work. Microsoft showed off a series of demos of Copilot opening PRs, taking feedback, and acting on code reviews for simple, well-defined tasks.

Dogfood that’s not tasty: Copilot Agent fumbles in real world with .NET. Microsoft is experimenting with Copilot on the complex .NET codebase, meaning everyone sees when this agent stumbles – often in comical ways. Still, I appreciate the transparency by Microsoft, which is in contrast to other agentic AI dev tools.

The real goal: staying the developer platform for most devs. Everything Microsoft builds seems to be about staying as the developer platform of choice with GitHub and Azure, while giving space for other startups to build tools on top of this platform.

More impressions. Not joining the AI hype is paying off for some companies; most places are hiring more, not fewer, devs; hiring junior engineers could be a smart strategy now; and more.

I attended BUILD on my own dime; neither Microsoft nor any other vendor paid for my travel, accommodation, and other expenses (they did offer). More in my ethics statement.

1. AI, AI, AI

The only theme of Microsoft's event was AI. Indeed, I can barely think of a major announcement from the tech giant that was not GenAI-related. Even when Microsoft unveiled improved security infrastructure, it was for AI applications. The one exception was the open sourcing of the Windows Subsystem for Linux (WSL).

Some notable announcements:

“The agent factory” vision. Microsoft’s stated goal is to become an “AI agent factory”, providing tools for companies to build AI agents, and being the platform which runs these. Azure AI Foundry is a product positioned for this. Microsoft clearly sees a large business opportunity, estimating there will be around 1.3 billion agents in a few years. Personally, this seems like a typical “say a big number to show there’s money to be made” kind of statement. After all, what does “agent” even mean: is it a running agent, code for an AI agent, and if I start 4 of the same agents in parallel, then how many is that?

Open sourcing Copilot Chat extension: the open sourced extension will use the permissive MIT license, making it much easier for developers and startups to create Copilot-like extensions, or to improve Copilot itself.

MCP support. Microsoft is doubling down on adding support for the Model Context Protocol to expand agent capabilities. We previously did a deepdive on MCP with one of its creators.

Grok coming to Azure. Grok is xAI’s large language model (LLM), which has previously only been available on the social network X.

NLWeb: short for Natural Language Web. An open source project by Microsoft to turn a website into an AI app. My take is that this project feels pretty abstract, like it’s Microsoft’s way to encourage more websites to create “AI interfaces” for AI agents to consume. One question is how widely-adopted this approach will be.

AI security and compliance with a new data security SDK called Microsoft Purview, which can be embedded into AI apps. This SDK can block LLMs’ access to sensitive data, and also allows data access to be audited. This will become more important for larger companies that need audit trails showing which developers or LLMs accessed sensitive data.

It was my first visit to BUILD, and I talked with engineers who have been coming for years. They felt this was the first BUILD at which a single topic – in this case, AI – has dominated everything, and I heard a few complaints about the narrow focus. These reactions reminded me of Amazon’s annual event last year, which caused similar grumbles. In The Pulse 101, AWS Serverless Hero Luc van Donkersgoed said:

“AWS’ implicit messaging tells developers they should no longer focus on core infrastructure, and spend their time on GenAI instead. I believe this is wrong. Because GenAI can only exist if there is a business to serve.”

I think I now better understand why Microsoft and other Big Tech giants are going so big on AI. There was nothing but AI positivity from every Microsoft presenter, but nobody mentioned trade-offs and current shortcomings, like hallucinations, and getting stuck on tasks. But these folks are very much aware of the current limitations and developers’ frustrations, so why the blanket optimism?

Basically, there’s little downside in being over-optimistic about the impact of AI, but there’s a major downside in being a little too pessimistic. If optimism is overstated, then people just spend too much time and effort on tools that get used less than predicted.

However, if there’s too much pessimism and it leads to inaction, then startups coming from nowhere can become billion-dollar businesses and rivals. This is exactly what happened with Google and Transformers: the search giant invented Transformers in 2017, but didn’t really look into how to turn them into products. So, a small startup called OpenAI created ChatGPT, and now has more than 500 million weekly active users, and a business worth $300B. Google is playing catchup. It was a costly mistake to not be optimistic enough about the impact of Transformers and AI!

My hunch is that Microsoft does not want to make the same error with developer tools. On that basis, take projections from the company’s leadership with a pinch of salt, and know that being optimistic is just what they do, but that there’s also a sensible rationale for this approach.

2. Copilot as “peer programmer”

In the past year, many companies have made bold promises of building tools to replace developers. Examples include Devin, billed as the world’s first “AI engineer”; Magic.dev, which aims to build a “superhuman software engineer”; and Google, whose chief scientist, Jeff Dean, recently shared that he expects AI systems to operate at the level of a “junior engineer” by 2026.

In all cases, the message to business decision makers seems clear: spend money on AI and you won’t have to hire engineers because the AI will be just as good, if not better, than devs. I previously analyzed how startups like Magic.dev and Devin might have seen no other option for raising funding than to make bold claims, since GitHub Copilot has already won the “copilot” category. These startups resorted to marketing stunts to claim they have (or will have) a product that’s equal to human developers.

In contrast, Microsoft is not talking about replacing developers. It says GitHub Copilot – and the latest version of the product called “Coding agent” – are tools to partner with developers, akin to a peer programmer.

At BUILD, Microsoft showed a more advanced demo of how their latest tools work across the development lifecycle – from planning, all the way to deployment and oncall – than I’ve seen from anyone else.

3. AI agents for day-to-day work

At BUILD, Microsoft stressed that all its demos were real, live, and used the tools that all developers can access immediately. This felt like a stab at Apple, which faked its demo for Apple Intelligence, and possibly a reference to Devin, whose claim that its “AI Engineer” could complete real tasks on Upwork wasn’t correct. I also take it as a sign that Microsoft understands that people can be tricked once with puffed-up demos, but that there’s a longer term cost, and the company would rather do something blander which works than set unrealisable expectations.

The live demo featured an imaginary project to put together an events page for the conference. It was not too ambitious or complex, and showed off that Copilot is integrated into far more places than before. Jay Parikh (EVP of CoreAI at Microsoft) and Jessica Deen (Staff Developer Advocate at GitHub) worked together to simulate a mini dev team, whose third “member” was Copilot. The demo showed examples of the type of collaboration devs can do with the preview version of GitHub Copilot in Visual Studio:

Information gathering

Jay acted as a new joiner to the team, and asked Copilot for context on a new codebase. This is a pretty basic GenAI use case, which also seems a useful one.

Create a PR

Jay asked the agent to improve the Readme of the project, and the agent responded by opening a PR based on this prompt. An important piece of context is that the GitHub UI clearly shows that the agent is working on behalf of a developer:

I want to highlight the wording used by GitHub because it’s significant:

Unlike many startups that treat AI agents as “autonomous” tools that do work on their own, with human devs left to clean up the mistakes, Copilot makes some things clear:

Copilot follows instructions it’s given

A Copilot task launched by a dev is the responsibility of that dev

Copilot is best treated as something to delegate to

The dev is responsible for merging code and for any regressions or mistakes. So, delegate smartly, and check Copilot’s work!

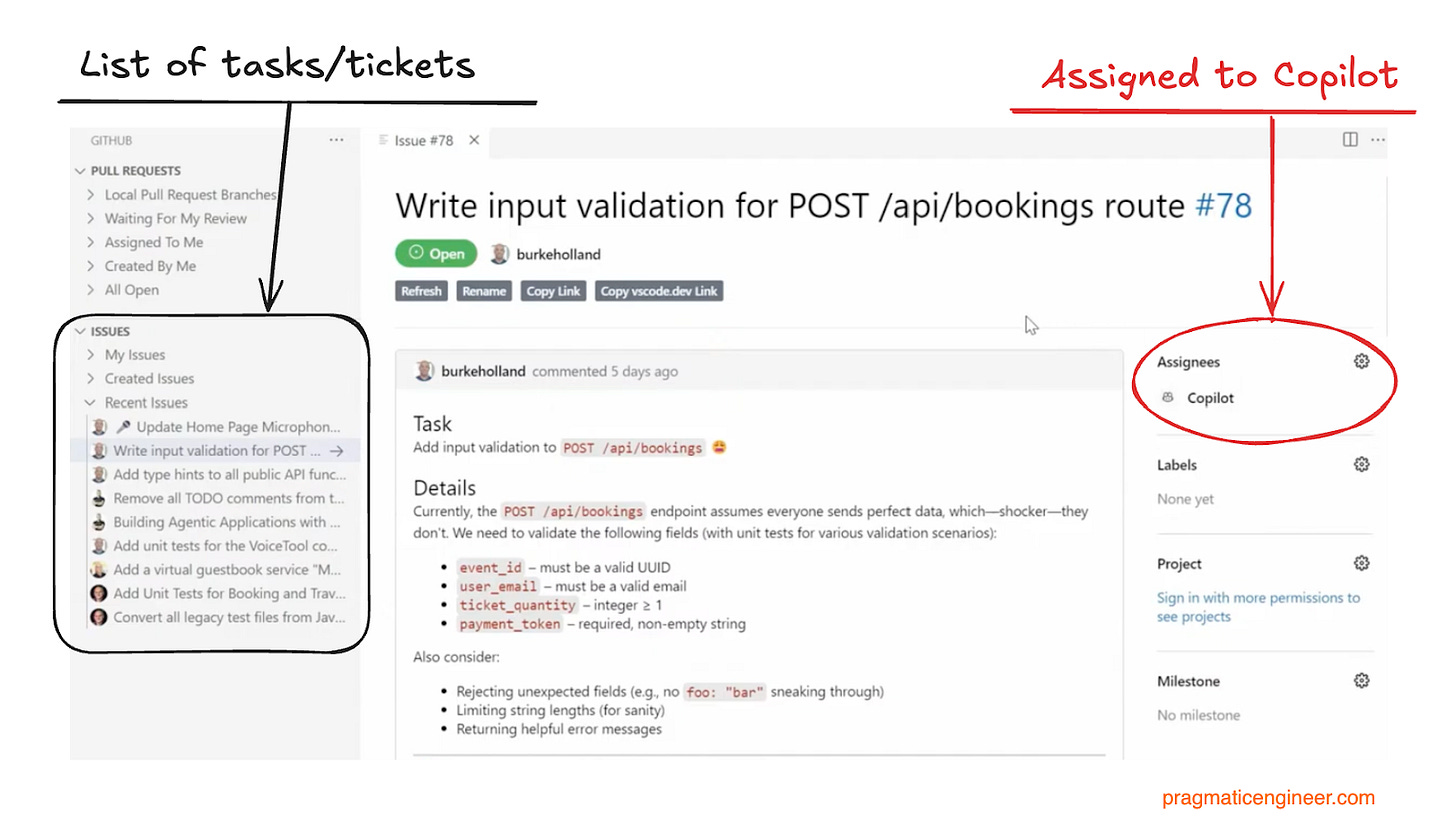

Assign a ticket

You can assign a ticket to the Copilot Agent, and the agent starts work asynchronously. The outcome is usually a PR that needs to be reviewed.

Follow written team coding guidelines

The coding agent follows coding guidelines defined in copilot-instructions.md. This is a very similar approach to what Cursor does with Cursor.md: it’s a way to add “persistent” context for the agent to use before runs.
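To make the idea of “persistent” context concrete, here is a minimal sketch of how a tool could prepend a guidelines file to every task prompt. This is purely illustrative: `build_prompt` and the prompt layout are my own assumptions, and this is not how Copilot or Cursor actually implement the feature.

```python
from pathlib import Path
import tempfile


def build_prompt(task: str, instructions_path: Path) -> str:
    """Prepend repository-level guidelines to a task prompt (illustrative only)."""
    guidelines = ""
    if instructions_path.exists():
        guidelines = instructions_path.read_text(encoding="utf-8").strip()
    if not guidelines:
        # No guidelines file (or an empty one): the task is sent as-is.
        return task
    return f"Team guidelines:\n{guidelines}\n\nTask:\n{task}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical guidelines, written to a temp file for the demo.
        path = Path(tmp) / "copilot-instructions.md"
        path.write_text(
            "- Use TypeScript strict mode\n- Add unit tests for new code\n",
            encoding="utf-8",
        )
        print(build_prompt("Improve the README", path))
```

The point of the sketch is that the guidelines are re-read and re-sent on every run; nothing is “remembered” by the agent itself.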

Connect to Figma and other tools using MCP

Microsoft added support for the Model Context Protocol (MCP), which allows tools like GitHub, Figma, or databases to be added both to the IDE (VS Code) and to the agent. This means the agent can be pointed to a Figma file, told to implement it using e.g. React, and it does so.
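For a sense of what an MCP tool invocation looks like on the wire, here is a small sketch. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `get_design_file` and its `file_key` argument are hypothetical placeholders, not the API of any real Figma MCP server.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and argument, for illustration only.
    print(make_tool_call(1, "get_design_file", {"file_key": "EXAMPLE"}))
```

The agent (or IDE) acts as the client that sends such requests, while each connected tool runs as a server that answers them.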

Draft commit messages

Commit messages usually summarize your work, and AIs are pretty good at summarizing things. It’s no surprise Copilot does well at summarizing the changes into a commit message. As a developer, you can change this, but it gives a good enough draft, and saves a lot of effort.

Act on code review feedback

When the agent submits a pull request, it will now act on comments added to it. This is not too surprising as the comment will be yet another input for the agent, but Microsoft did a nice job in integrating it into the workflow, and showing how these agents can use more tools. For example, here’s a comment instructing the agent to add an architecture diagram about the structure of the code, using a specific library (Mermaid.js):

Upon adding the comment, the agent picks it up immediately and kicks off work, asynchronously:

And it updates the PR by adding a diagram, in this case. We can imagine how this works similarly with other comments and change requests:

Principles of using agents

From this presentation, there are signs of how Microsoft sees Copilot being used:

“Trusty sidekick.” The developer is in charge: Copilot is a buddy they can offload work to, which the dev will need to validate

Offload trivial, time-consuming work. As Jessica put it: “I can let GitHub Copilot Coding Agent handle things that drain my will to code, and focus on real product work.”

Use “AI instructions” in the codebase: define context in a copilot-instructions.md file, similar to other agents (such as how Cursor works with cursor.md)

All this makes sense. My only addition would be to not forget the obvious fact that this “sidekick” is not human, and does not “learn” from interactions. I keep making this error when I use agentic mode in Windsurf or Cursor: even after solving a problem with the tool, like having it query a database table after giving it guidance, the tool “forgets” all this detail. These tools have limited context, and unlike humans who (usually) learn from previous actions, this is not currently true of AI agents.
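A toy model of this “forgetting” is below. It is a deliberately simplified sketch: `ToyAgentSession` is a made-up class, and real tools manage context by tokens rather than by message count, but the effect is similar. Guidance given early in a session falls out of the window, and a new session starts with nothing.

```python
from collections import deque


class ToyAgentSession:
    """Toy model: the agent only 'sees' the most recent messages of one session."""

    def __init__(self, max_messages: int) -> None:
        # A bounded deque silently drops the oldest message once it is full.
        self.context = deque(maxlen=max_messages)

    def tell(self, message: str) -> None:
        self.context.append(message)

    def knows(self, fact: str) -> bool:
        return any(fact in message for message in self.context)


if __name__ == "__main__":
    session = ToyAgentSession(max_messages=2)
    session.tell("Guidance: query the orders table via the read replica")
    print(session.knows("read replica"))   # guidance is still in context
    session.tell("Now refactor the billing module")
    session.tell("Also update the changelog")
    print(session.knows("read replica"))   # guidance has been pushed out
    print(ToyAgentSession(2).knows("read replica"))  # a fresh session knows nothing
```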

Demos that feel artificial

There are many things I like about what Microsoft showed on stage:

Real demos, done live

Making it clear that devs delegate work to the AI, and remain responsible for the work

Not only focusing on coding, but also on things like code review

The whole workflow feels well thought out, and works nicely

However, the demo itself was underwhelming for a tech company of Microsoft’s stature.

It was about building an events site without too many requirements; basically, something the marketing team could do with a website builder. Honestly, the demo used the type of site which in-house devs are unlikely to work on.

Also, the demo felt a bit unrealistic while it showcased the agent’s functionality. On one hand, this is the nature of demos, right? But on the other, they omit the reality of working on a larger, often messy codebase, within a larger team.

To be fair, these kinds of demos would have been impossible even a year ago, simply because AI models were less capable. Microsoft did a good job of integrating the agent with a sensible UI, and using increasingly better models to show that agents can take on simpler work and deliver acceptable results. I want to stress that the output I observed was okay, but I couldn’t call it standout. What Microsoft demoed can be one-shotted these days with a single prompt by tools like Lovable, bolt.new, Vercel v0, or similar.

To its credit, Microsoft did real demos, but used overly-simple scenarios. The project was web-based (the environment in which LLMs are strongest), all the tickets were very well-defined, the codebase was simple, and the demo was well rehearsed.

4. Dogfood that’s not tasty: Copilot Agent fumbles in real world with .NET

To its credit, Microsoft is dogfooding Copilot themselves, and they are doing this in the open. The .NET team has been experimenting with using Copilot Agent for this complex and large project. This codebase is not easy to work on, even as an experienced engineer, and Copilot lags behind the capabilities of experienced engineers – heck, sometimes even junior ones!