Resiliency in Distributed Systems

Two chapters from the book Understanding Distributed Systems by Roberto Vitillo

👋 Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at big tech and high-growth startups through the lens of engineering managers and senior engineers.

Understanding the ins and outs of distributed systems is important both for backend engineers and for anyone working with large-scale systems. Large-scale systems can mean systems with high load and high queries per second (QPS), systems storing a large amount of data, or ones built for low latency and high reliability. These systems are common across both Big Tech and high-growth startups.

One of the most interesting books I’ve found on this topic is Understanding Distributed Systems. The book was written by Roberto Vitillo, who was a Senior Staff engineer at Mozilla, then a Principal Engineer at Microsoft. The second edition of this book was released in February of this year.

The book is structured in five parts:

Communication. Reliable links, secure links, discovery, APIs.

Coordination. System models, failure detection, time, leader election, replication, coordination avoidance, transactions.

Scalability. HTTP caching, content delivery networks, partitioning, file storage, data storage, caching, microservices, control planes and data planes, messaging.

Resiliency. Common failure causes, redundancy, fault isolation, downstream resiliency, upstream resiliency.

Maintainability. Testing, continuous delivery and deployment, monitoring, observability, and manageability.

I like how the book works its way from the theory needed to understand distributed systems - communication and coordination - to practical topics like scalability and resiliency. The book closes with topics on maintainability, an area that I found gets surprisingly little focus in most books.

I reached out to Roberto asking if he’d be open to sharing a few chapters of the book with newsletter readers, and Roberto agreed to do so. I chose two chapters on resiliency, from Part 4. If you’d like to dive deeper into other topics, you can buy the e-book on Roberto’s website, or the print book off Amazon.

In this excerpt, we cover:

1. Downstream resiliency

Timeout

Retry: exponential backoff, retry amplification

Circuit breaker

2. Upstream resiliency

Load shedding

Load leveling

Rate limiting: single process and distributed implementations

Constant work

Note that - as always - no links in this newsletter are affiliates and I have not been paid to endorse or recommend this book. More in my ethics statement.


1. Downstream resiliency (Chapter 27)

Now that we have discussed how to reduce the impact of faults at the architectural level with redundancy and partitioning, we will dive into tactical resiliency patterns that stop faults from propagating from one component or service to another. In this chapter, we will discuss patterns that protect a service from failures of downstream dependencies.

Timeout

When a network call is made, it’s best practice to configure a timeout to fail the call if no response is received within a certain amount of time. If the call is made without a timeout, there is a chance it will never return, and as mentioned in chapter 24, network calls that don’t return lead to resource leaks. Thus, the role of timeouts is to detect connectivity faults and stop them from cascading from one component to another. In general, timeouts are a must-have for operations that can potentially never return, like acquiring a mutex.

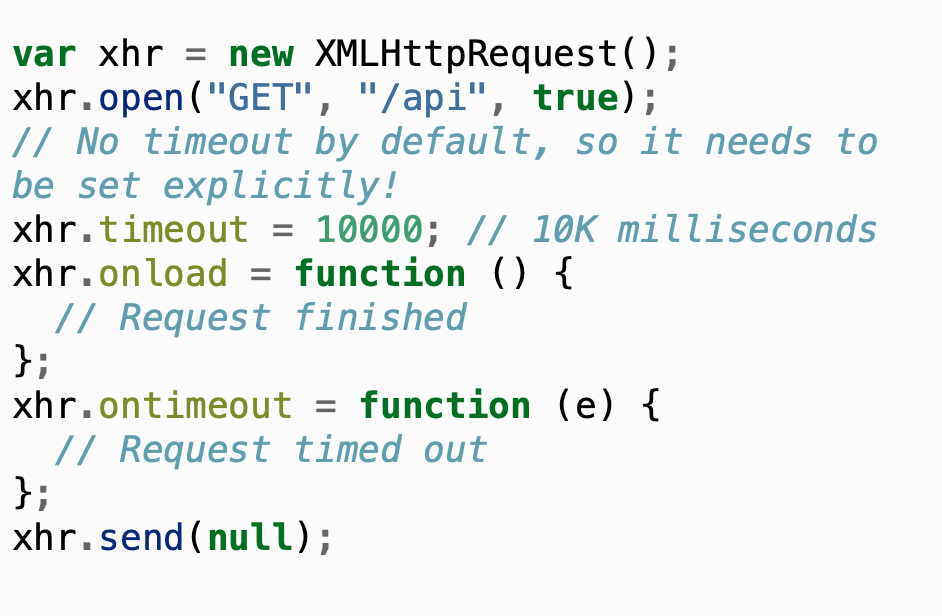

Unfortunately, some network APIs don’t have a way to set a timeout in the first place, while others have no timeout configured by default. For example, JavaScript’s XMLHttpRequest is the web API to retrieve data from a server asynchronously, and its default timeout is zero, which means there is no timeout.

The fetch web API is a modern replacement for XMLHttpRequest that uses Promises. When the fetch API was initially introduced, there was no way to set a timeout at all. Browsers have only later added support for timeouts through the Abort API. Things aren’t much rosier for Python; the popular requests library uses a default timeout of infinity. And Go’s HTTP package doesn’t use timeouts by default.

Modern HTTP clients for Java and .NET do a better job and usually come with default timeouts. For example, .NET Core HttpClient has a default timeout of 100 seconds. It’s lax but arguably better than not having a timeout at all.

As a rule of thumb, always set timeouts when making network calls, and be wary of third-party libraries that make network calls but don’t expose settings for timeouts.

But how do we determine a good timeout duration? One way is to base it on the desired false timeout rate. For example, suppose we have a service calling another, and we are willing to accept that 0.1% of the downstream requests that would eventually have returned a response time out instead (i.e., a 0.1% false timeout rate). To accomplish that, we can configure the timeout based on the 99.9th percentile of the downstream service’s response time.
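To make the percentile idea concrete, the sketch below derives a timeout from a sample of observed response times using the nearest-rank percentile. The function name and the use of a plain list of samples are assumptions for illustration; in practice the percentile would more likely come from a metrics system.

```python
import math


def timeout_for_false_rate(latencies: list[float], false_timeout_rate: float) -> float:
    """Pick the smallest observed latency such that at most
    `false_timeout_rate` of the samples are slower than it.

    Hypothetical helper; uses the nearest-rank percentile definition.
    """
    if not latencies:
        raise ValueError("need at least one latency sample")
    if not 0.0 <= false_timeout_rate < 1.0:
        raise ValueError("false_timeout_rate must be in [0, 1)")
    ordered = sorted(latencies)
    # round() guards against floating-point noise such as 998.9999999999.
    rank = math.ceil(round((1.0 - false_timeout_rate) * len(ordered), 9))
    return ordered[max(rank, 1) - 1]


# 1,000 samples of 1..1000 ms: a 0.1% false timeout rate maps to the
# 99.9th percentile, so only the single slowest sample would time out.
samples_ms = [float(ms) for ms in range(1, 1001)]
print(timeout_for_false_rate(samples_ms, 0.001))  # 999.0
```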

We also want to have good monitoring in place to measure the entire lifecycle of a network call, like the duration of the call, the status code received, and whether a timeout was triggered. We will talk more about monitoring later in the book, but the point I want to make here is that we have to measure what happens at the integration points of our systems, or we are going to have a hard time debugging production issues.

Ideally, a network call should be wrapped within a library function that sets a timeout and monitors the request so that we don’t have to remember to do this for each call. Alternatively, we can also use a reverse proxy co-located on the same machine, which intercepts remote calls made by our process. The proxy can enforce timeouts and monitor calls, relieving our process of this responsibility. We talked about this in section 18.3 when discussing the sidecar pattern and the service mesh.
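One possible shape for such a library function is sketched below. It wraps any operation that accepts a timeout, and records how long the call took and whether it timed out or failed. `CallStats` and `monitored_call` are hypothetical names, and an in-memory counter stands in for a real metrics client; recording status codes is left out to keep the sketch short.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, TypeVar

T = TypeVar("T")


@dataclass
class CallStats:
    """In-memory stand-in for a metrics client (an assumption of this sketch)."""
    calls: int = 0
    timeouts: int = 0
    failures: int = 0
    durations_s: list[float] = field(default_factory=list)


def monitored_call(stats: CallStats, operation: Callable[[float], T], timeout_s: float) -> T:
    """Run operation(timeout_s), recording its duration and outcome."""
    stats.calls += 1
    started = time.monotonic()
    try:
        return operation(timeout_s)
    except TimeoutError:
        stats.timeouts += 1
        raise
    except Exception:
        stats.failures += 1
        raise
    finally:
        # Recorded for successes, timeouts, and failures alike.
        stats.durations_s.append(time.monotonic() - started)
```

Passing the timeout through the wrapper means callers cannot forget to set one, which is the main point of centralizing the call.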

Retry

We know by now that a client should configure a timeout when making a network request. But what should it do when the request fails or times out? The client has two options at that point: it can either fail fast or retry the request. If a short-lived connectivity issue caused the failure or timeout, then retrying after some backoff time has a high probability of succeeding. However, if the downstream service is overwhelmed, retrying immediately after will only worsen matters. This is why retrying needs to be slowed down with increasingly longer delays between the individual retries until either a maximum number of retries is reached or enough time has passed since the initial request.

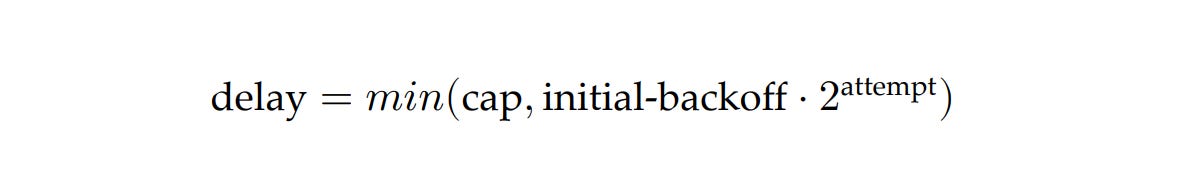

Exponential backoff

To set the delay between retries, we can use a capped exponential function, where the delay is derived by multiplying the initial backoff duration by a factor that grows exponentially with each attempt, up to some maximum value (the cap):

delay = min(cap, initial-backoff · 2^attempt)

Here, attempt counts the retries starting from zero.

For example, if the cap is set to 8 seconds, and the initial backoff duration is 2 seconds, then the first retry delay is 2 seconds, the second is 4 seconds, the third is 8 seconds, and any further delay will be capped to 8 seconds.
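The numbers in this example can be reproduced with a few lines of Python. This is a sketch that assumes attempts are counted from zero and that the factor doubles on each attempt; the function and parameter names are illustrative.

```python
def backoff_delay(attempt: int, initial_backoff_s: float = 2.0, cap_s: float = 8.0) -> float:
    """Capped exponential backoff: the delay doubles per attempt, up to cap_s.

    `attempt` is zero-based, so attempt 0 is the first retry.
    """
    # Clamp the exponent so very large attempt counts cannot overflow a float.
    exponent = min(attempt, 30)
    return min(cap_s, initial_backoff_s * 2 ** exponent)


print([backoff_delay(attempt) for attempt in range(5)])
# [2.0, 4.0, 8.0, 8.0, 8.0]
```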

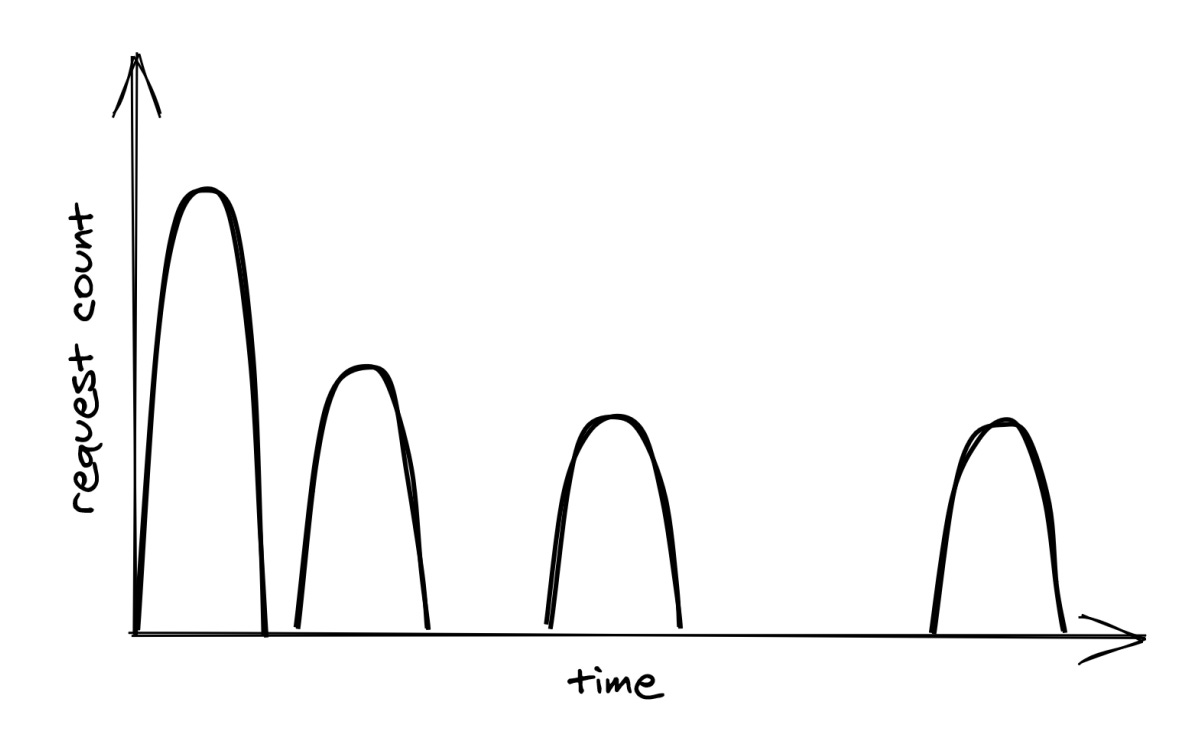

Although exponential backoff does reduce the pressure on the downstream dependency, it still has a problem. When the downstream service is temporarily degraded, multiple clients will likely see their requests failing around the same time. This will cause clients to retry simultaneously, hitting the downstream service with load spikes that further degrade it, as shown in Figure 27.1.

To avoid this herding behavior, we can introduce random jitter into the delay calculation. This spreads retries out over time, smoothing out the load to the downstream service:

delay = random(0, min(cap, initial-backoff · 2^attempt))
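A retry loop with jitter could look roughly like the following sketch. It draws each delay uniformly between zero and the capped exponential value, and gives up after a maximum number of retries. The names, the default values, and the choice to treat only `TimeoutError` and `ConnectionError` as retryable are assumptions of the example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def jittered_delay(attempt: int, initial_backoff_s: float, cap_s: float) -> float:
    """Random delay between 0 and the capped exponential backoff value."""
    upper = min(cap_s, initial_backoff_s * 2 ** min(attempt, 30))
    return random.uniform(0.0, upper)


def retry_with_backoff(
    operation: Callable[[], T],
    max_retries: int = 3,
    initial_backoff_s: float = 2.0,
    cap_s: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,  # injectable for testing
) -> T:
    """Call operation(), retrying transient failures with jittered backoff."""
    for attempt in range(max_retries + 1):
        try:
            return operation()
        except (TimeoutError, ConnectionError):
            if attempt == max_retries:
                raise  # retry budget exhausted: surface the last error
            sleep(jittered_delay(attempt, initial_backoff_s, cap_s))
    raise AssertionError("unreachable")
```

Since each client picks its own random delay, clients that failed at the same moment are unlikely to retry at the same moment.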

Actively waiting and retrying failed network requests isn’t the only way to implement retries. In batch applications that don’t have strict real-time requirements, a process can park a failed request into a retry queue. The same process, or possibly another, can read from the same queue later and retry the failed requests.
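The retry-queue idea can be sketched as follows, with an in-memory `deque` standing in for what would normally be a durable queue. The `submit` and `drain` helpers and the string-typed requests are hypothetical.

```python
from collections import deque
from typing import Callable, Deque


def submit(request: str, send: Callable[[str], None], retry_queue: Deque[str]) -> bool:
    """Try the request once; on a transient failure, park it instead of waiting."""
    try:
        send(request)
        return True
    except (TimeoutError, ConnectionError):
        retry_queue.append(request)
        return False


def drain(retry_queue: Deque[str], send: Callable[[str], None]) -> int:
    """Retry each parked request once; requests that fail again are re-queued.

    Returns the number of requests that succeeded on this pass.
    """
    succeeded = 0
    # Snapshot the length so re-queued requests wait for the next pass.
    for _ in range(len(retry_queue)):
        request = retry_queue.popleft()
        if submit(request, send, retry_queue):
            succeeded += 1
    return succeeded
```

A later pass over the queue, by the same process or another one, plays the role of the backoff delay.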

Just because a network call can be retried doesn’t mean it should be. If the error is not short-lived, for example, because the process is not authorized to access the remote endpoint, it makes no sense to retry the request since it will fail again. In this case, the process should fail fast and cancel the call right away. And as discussed in chapter 5.7, we should also understand the consequences of retrying a network call that isn’t idempotent and whose side effects can affect the application’s correctness.
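For HTTP calls, the decision could be expressed as a small predicate like the one below. The set of idempotent methods follows the HTTP specification; the set of status codes treated as transient is an assumption of this sketch and would need tuning per dependency.

```python
# Methods that HTTP defines as idempotent.
IDEMPOTENT_METHODS = {"GET", "HEAD", "OPTIONS", "TRACE", "PUT", "DELETE"}

# Status codes treated as likely transient (an assumption of this sketch).
TRANSIENT_STATUS_CODES = {408, 429, 500, 502, 503, 504}


def should_retry(method: str, status_code: int) -> bool:
    """Retry only idempotent requests that failed for a likely transient reason."""
    if method.upper() not in IDEMPOTENT_METHODS:
        return False  # retrying could repeat a side effect
    return status_code in TRANSIENT_STATUS_CODES


print(should_retry("GET", 503))   # True: likely short-lived
print(should_retry("GET", 403))   # False: not authorized, will fail again
print(should_retry("POST", 503))  # False: not idempotent
```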

Retry amplification

Suppose that handling a user request requires going through a chain of three services. The user’s client calls service A, which calls service B, which in turn calls service C. If the intermediate request from service B to service C fails, should B retry the request or not? Well, if B does retry it, A will perceive a longer execution time for its request, making it more likely to hit A’s timeout. If that happens, A retries the request, making it more likely for the client to hit its timeout and retry.

Having retries at multiple levels of the dependency chain can amplify the total number of retries — the deeper a service is in the chain, the higher the load it will be exposed to due to retry amplification (see Figure 27.2).

And if the pressure gets bad enough, this behavior can easily overload downstream services. That’s why, when we have long dependency chains, we should consider retrying at a single level of the chain and failing fast in all the others.
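The amplification is easy to quantify in the worst case, where every attempt ends up failing. The sketch below multiplies the attempts made at each level of the chain; the function name and the retry counts are illustrative.

```python
def requests_per_service(retries_per_caller: list[int]) -> list[int]:
    """Worst-case requests received by each service for one user request.

    retries_per_caller[i] is the number of retries made by the i-th caller
    in the chain (for example: the client, then A, then B). The i-th result
    is the load seen by the service that caller talks to (A, B, C).
    """
    load = []
    attempts = 1
    for retries in retries_per_caller:
        attempts *= 1 + retries  # the first try plus its retries
        load.append(attempts)
    return load


# The client, A, and B each retry twice: C can see up to 27 requests.
print(requests_per_service([2, 2, 2]))  # [3, 9, 27]
# Retrying at a single level only: every service sees at most 3 requests.
print(requests_per_service([2, 0, 0]))  # [3, 3, 3]
```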

Circuit breaker

Suppose a service uses timeouts to detect whether a downstream dependency is unavailable and retries to mitigate transient failures. If the failures aren’t transient and the downstream dependency remains unresponsive, what should it do then? If the service keeps retrying failed requests, it will necessarily become slower for its clients. In turn, this slowness can spread to the rest of the system.

This article is too long to fit in an email. Continue reading the rest of Chapter 27 (downstream resiliency) and Chapter 28 (upstream resiliency) online.
