Inside Uber’s move to the Cloud: Part 1

Uber has operated its own data centers for 9 years. What challenges did the company face, and why is it considering moving to the Cloud? Part 1.

👋 Hi, this is Gergely with the monthly free issue of the Pragmatic Engineer Newsletter. In every issue, I cover challenges at Big Tech and high-growth startups through the lens of engineering managers and senior engineers.

Uber has been one of the few Big Tech companies operating its own data centers, resisting the temptation to shift most of its compute and data storage capacity to the Cloud, for many years. During my time there in 2016-2020, there was some usage of AWS and GCP and engineers say this usage has only grown since, although Uber continued investing heavily in its own data centers.

But on 13 February, Google Cloud CEO, Thomas Kurian, publicly confirmed the signing of a long-term contract with Uber to move to the Cloud, following reporting by The Wall Street Journal.

So, what happened and why? I’ve talked with infrastructure engineers at Uber to get a clear picture of the context that led to this.

I’ve learned a lot of details not shared publicly until now – too many to fit into one newsletter issue. Today, we dive into how Uber built their data centers, the challenges faced, and the increasing pressure to consider the Cloud. In the next issue, we’ll dig into what Uber’s move to the Cloud means.

In today’s article, we cover:

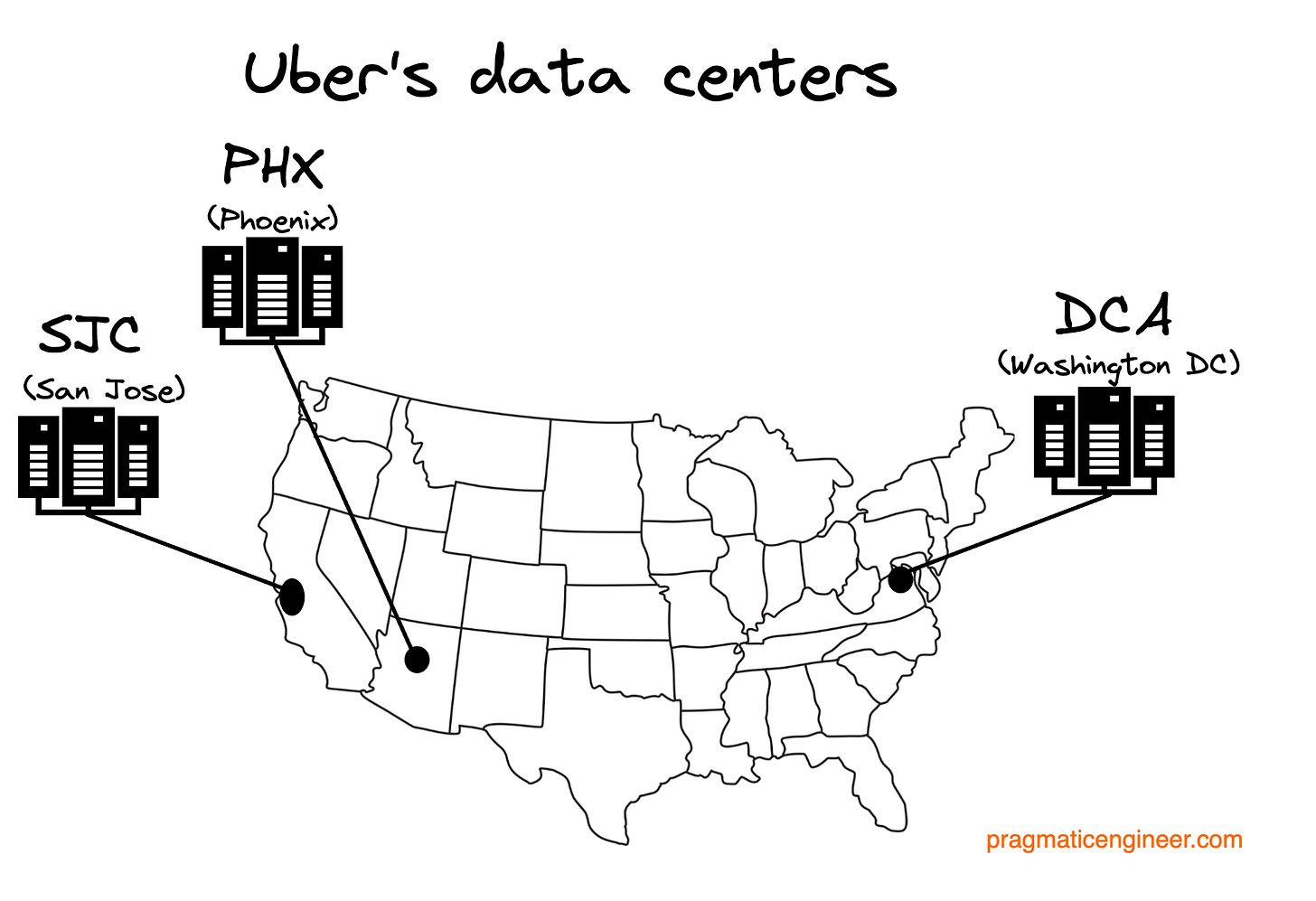

The history of Uber’s data centers. How did the SJC, DCA and PHX data centers come to be, and why was SJC decommissioned?

Challenges of operating your own data centers. Hard drives, ODM woes, and the automation of data center maintenance.

The push to the Cloud. Incentives and pull factors from Covid-19, the Postmates acquisition, and CapEx and OpEx costs.

Cloud basics. A primer on data centers, regions, and availability zones. What do these mean for public Cloud providers and businesses like Uber?

Part 2 of the series additionally covers:

The groundwork of moving to the Cloud. Uber’s hybrid Cloud approach and why Crane – a 5-year project to support Cloud migrations – was key. Read more about Crane.

Is the Cloud necessarily cheaper? Spoiler: “it depends.”

IaaS, PaaS, and SaaS. And why these differences matter when talking about “the Cloud.”

What does Uber moving to the Cloud mean? No: Uber won’t be fully on the Cloud. A nuanced look at what this may mean for IaaS, PaaS and SaaS services.

Things can go wrong when operating your data centers. Power outages, flapping NICs, wildfires, and more.

Learnings about running your data centers. Engineers who built Uber’s data centers share useful learnings, relevant beyond Uber.

Don’t forget that there is no “one size fits all” approach when it comes to cloud strategy. As proof of this, see the article Inside Agoda’s Private Cloud, a case study on travel tech giant Agoda not utilizing the public cloud at all, even as the company employs 1,600 software engineers, sees 7.5M queries/second and stores over 30PB of data. Agoda continuously monitors whether moving to the cloud would make economic sense and, so far, it has not. Read more on Agoda’s cloud strategy.

1. The history of Uber’s data centers

The first two data centers. Uber was founded in 2009 and started to grow quickly shortly after. The company did a beta launch in 2010 and launched publicly in San Francisco in 2011.

From around 2013, Uber outsourced operating its infrastructure to a now-defunct company called Peak Hosting, a California-based hosting provider. A person who worked at Peak Hosting at the time shared:

“Peak was predicated on this idea that the cloud sucked and bare metal was the only way to go. Uber outsourced the datacenter (hardware+network) side to Peak, as well as a fair amount of the OS-level system build and management side of things. Peak’s tagline at the time was: ‘everything but your code’. Back then, Uber's entire infra was running on about a dozen Dell PowerEdge servers.”

2014 was a big year for Uber, engineering-wise, for two reasons. First, this was the year the company made the program/platform split, which we covered in-depth in The platform and program split at Uber. From that article:

“In the spring of 2014, Uber’s Chief Product Officer, Jeff Holden, sent an email to the tech team. The changes outlined in this email would change how engineering operated and shape the culture for years to come. (...)

It had only been three years since Uber made its first full-time engineering hire, and there were already more than 100 engineers, 10 Product Managers (PM), and 15 designers at the company by this time.”

2014 was also the year when Uber started down the path of building its own data center. Uber started to move off Peak Hosting, as the provider could not handle the velocity of changes Uber needed in terms of deployments and configurations on top of the hardware level. According to a tenured engineer at Uber, it was then that Uber’s first data center in San Jose – called SJC, internally – was built, and teams migrated services over to it.

Following SJC, a second data center was built near Washington DC, known as DCA. For each data center, additional zones - isolated areas - were added, which were named by a number at the end of the data center’s acronym; SJC1 was Zone 1 within SJC, DCA1 was Zone 1 within DCA, etc.

Back then, building a private data center made a lot of sense for Uber. Cloud computing services were not nearly as sophisticated as today, and Uber was growing rapidly and raising funding equally fast. The company was also expanding into new verticals like UberEats, UberRush, UberAir and more. Owning and operating its own data centers was as much a strategic choice as that which Google or Facebook made for their businesses. Critically, Uber had the capital to invest in the upfront costs of its new data centers.

Failovers, multizone and building a third data center. Uber’s architecture evolved with the infra setup. The company operates thousands of microservices, with each team responsible for ensuring they run reliably. Since Uber had two data centers for a very long time, most services operated on the assumption that at least one would be healthy, and operated from the ‘preferred’ data center. The services would test for a failover, simulating one data center going down, and spinning up in another data center.

When I worked at Uber, we regularly ran failover tests, usually around major traffic events. My team’s services lived on the DCA1 data center, so we did failovers to SJC1. However, for a long time, most teams ran services out of a single data center.

The push for most critical services to become multi-zone and multi-region started gaining momentum around 2017, as more zones were added to each data center. A service being multi-zone meant it ran nodes across multiple zones within the same data center, allowing zones to be scaled up or down and for load to be balanced across zones. Multi-region meant doing this across geographical regions. Adding multi-zone, multi-region support to services with no previous knowledge of zones or regions was a considerable engineering task.
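To make the multi-zone and multi-region idea more concrete, here is a minimal Python sketch of choosing where a service should run. The zone names follow the naming pattern described above, but the `Zone` class, the health flags and the `pick_zone` helper are hypothetical illustrations, not Uber’s actual routing logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str      # e.g. "DCA1": data center acronym plus zone number
    region: str    # the data center (region) the zone belongs to
    healthy: bool  # hypothetical health flag, e.g. fed by health checks


def pick_zone(zones: list[Zone], preferred_region: str) -> Zone:
    """Pick a healthy zone, preferring the service's 'preferred' region.

    Multi-zone: any healthy zone within the preferred region will do.
    Multi-region: if every zone in the preferred region is down,
    fail over to a healthy zone in another region.
    """
    healthy = [z for z in zones if z.healthy]
    if not healthy:
        raise RuntimeError("no healthy zone available")
    in_preferred = [z for z in healthy if z.region == preferred_region]
    candidates = in_preferred or healthy
    # Deterministic choice to keep the sketch simple; a real load balancer
    # would spread traffic across all candidates.
    return min(candidates, key=lambda z: z.name)


zones = [
    Zone("DCA1", "DCA", healthy=False),
    Zone("DCA2", "DCA", healthy=True),
    Zone("SJC1", "SJC", healthy=True),
]
chosen = pick_zone(zones, preferred_region="DCA")
```

In this sketch, losing DCA1 only moves the service to DCA2 (multi-zone), while losing all of DCA would move it to SJC1 (multi-region). A service with no notion of zones or regions has nothing like `pick_zone` to call, which hints at why retrofitting this support was a considerable task.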

Around 2019, Uber started to build a third data center in Phoenix, Arizona, called PHX. The reason for building this data center? SJC was too small for Uber’s requirements, could not be extended and also had reliability issues. Infra leadership decided the best approach was to develop a new data center and to decommission SJC once PHX went online.

2. Challenges of operating your own data centers

For any company operating its own data centers, hardware challenges are common and Uber was no different.

Hard drives, a regular issue. Like many other companies which acquire high volumes of hardware, Uber chose to buy cheaper solid-state drives (SSDs) designed to withstand less intensive usage than costlier, enterprise-ready versions. Some of the SSDs wore out within a year because they had low DWPD (Drive Writes Per Day) and in high-write scenarios – such as serving write-intensive databases – they didn’t last long. But they were cheap.
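As a rough illustration of why a low DWPD rating hurts in write-heavy scenarios, here is a back-of-the-envelope sketch in Python. It assumes the common reading of DWPD as the number of full-capacity writes per day a drive is rated for over its warranty period. All figures below are hypothetical and are not Uber’s actual drive specifications or workloads.

```python
def rated_endurance_tb(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total terabytes a drive is rated to absorb over its warranty period."""
    return dwpd * capacity_tb * 365 * warranty_years


def expected_life_years(
    dwpd: float,
    capacity_tb: float,
    warranty_years: float,
    actual_tb_written_per_day: float,
) -> float:
    """Years until the rated endurance is used up at the actual write rate."""
    endurance = rated_endurance_tb(dwpd, capacity_tb, warranty_years)
    return endurance / (actual_tb_written_per_day * 365)


# Hypothetical low-endurance drive: 0.3 DWPD, 2 TB, 5-year warranty,
# placed under a write-intensive database writing 3 TB per day.
life = expected_life_years(
    dwpd=0.3, capacity_tb=2, warranty_years=5, actual_tb_written_per_day=3
)
```

With these made-up numbers the drive uses up its rated endurance in about one year instead of five, because the workload writes five times more per day than the drive is rated for.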

As Uber began investing heavily in its own data centers, it switched from OEM to ODM hardware around 2018-19. OEM stands for “original equipment manufacturer,” which means hardware is acquired directly from suppliers like Dell, HP, Lenovo. When a company is purchasing potentially hundreds of thousands of hard drives and physical machines, OEM becomes costly as manufacturers add their own margin.

Is there a cheaper way to do it without compromising on quality? There is: cut out the OEM by going to the factory yourself. This approach is called ODM, which stands for “original design manufacturer.” ODM vendors include Quanta, Wiwynn and Foxconn.

ODM typically involves going to manufacturers in countries like Taiwan or China. The benefit is clear: economies of scale. The biggest issue with ODM? Quality issues take more time to iron out, but once they are, this option is cheaper and capacity can often be planned better.

ODM is the approach Meta, Google, Oracle and Microsoft all follow, having relationships with manufacturers. They staff their ODM operations with a hundred or more in-house engineers for planning, running the process and quality assurance.

For example, at AWS, the company architects and defines the products, and then lets the ODM design them. According to engineers I talked with, this is how their ARM-based Graviton processor, Nitro virtualization platform, network switches, racks, cooling design, generator firmware and more are architected.

A major distinction between Uber’s ODM operation and those of Facebook and the cloud providers was staffing. Uber attempted to run its ODM process with fewer people because the volumes produced were lower than elsewhere. However, with ODM, volume doesn’t matter much; you need similar levels of expertise and workforce to manage an operation manufacturing 100,000 ODM units, or 1 million of them.

When designing custom hardware, hardware engineers need to be involved in the planning phase, and then to test and participate in quality assurance. ODMs usually deliver BIOS and firmware, with help from third-party providers like AMI. Places like Google employ specialized firmware engineers who sometimes design and always test custom firmware for ODM hardware. However, Uber’s hardware team had no such firmware division.

As is typical when switching from OEM to ODM hardware, Uber’s hardware team regularly discovered hardware flaws, mostly derived from the business using hardware designed specifically for itself. Sometimes, bugs were caught only when the hardware was in mass production, making them widespread and the cause of outages.

With OEM, the benefit is that there’s a good chance that a hardware issue was discovered at another customer, and the fix is being worked on. OEM vendors also have more resources than Uber for detecting flaws before mass production begins.

Meanwhile, a company like Oracle has deep expertise in server manufacturing. It acquired Sun Microsystems, which was known as a high-quality computer / server hardware manufacturer. This brought in house the expertise and resources for Oracle to provide high-quality servers and cloud services.

Other key areas when operating your own data center are automated hardware operations and hardware maintenance. Most serious cloud providers have automation in place to make this more reliable, efficient and better value, long term.

Uber built plenty of automation tools for its data centers, especially on the software side. For example, the company automated provisioning of machines, and database operations were more or less handled automatically.

However, the data center automation for maintaining hardware was nothing like the near-total automation some cloud providers do. This means maintenance involves lots of manual work, which is expensive in staffing and the time it takes to complete tasks like replacing faulty or worn-out units. At the same time, while Uber lags on automation, I gather it’s still ahead of some tech companies.

In all fairness, the question: “How much should we invest in automating our data centers?” is awfully similar to the software engineering question: “How much should we invest in automating testing of our code?” The pragmatic approach is to start with manual work, and add automation when the payoff is clear.

3. The push to the Cloud

Since Dara Khosrowshahi became CEO of Uber, I’m told utilizing the Cloud more is a regular topic. His former workplace Expedia uses the Cloud extensively, so it’d be an obvious point for him to raise.

Covid-19 created some “waste” in Uber’s data centers, which might have been avoided on the Cloud. When the pandemic hit, Uber’s core activity of ride sharing nosedived as societies entered lockdowns. Revenue fell off a cliff as demand for Uber’s services dropped sharply.

The one thing that was largely unchanged was the cost of operating its data centers. Suddenly, Uber had lots of unused excess capacity for which energy bills were still due, maintenance work needed to be done, and hardware that continued to wear out.

Luckily for Uber, while the pandemic meant the demand for Rides plummeted, Eats saw a surge in popularity. In this sense, Uber’s infrastructure utilization bounced back thanks to Eats scaling up, as Rides scaled down.

Given how unexpected Covid-19 was, would using a Cloud provider have been different? In most cases, not so much. Long-term contracts with Cloud providers involve reserving capacity for a period of years, and it’s usually very hard to “return” capacity as the Cloud provider would take on the bills, maintenance, and replacing of old hardware.

However, Covid-19 created a new precedent in the form of an online retail boom. While Uber experienced weakened demand for Rides, the e-commerce sector suddenly needed far more capacity to serve the influx of shoppers online. In this unprecedented situation, perhaps a Cloud provider could’ve processed a “return” by Uber, and resold the capacity.

In a neat move, AWS built the EC2 Reserved Instance Marketplace, where customers can advertise reserved instances for which 1 or 3 years have been paid in advance, but are no longer needed. Buyers get cheaper reserved instances and sellers don’t lose all their investment. At the time of publication, I’m unaware of other Cloud providers offering this.

CapEx vs OpEx differences and the chip shortage. As engineers, it’d be nice if we could ignore the financial side of our work, but with data centers it’s not possible. The long and short of it is that services from a Cloud provider are paid for in regular, predictable installments across quarters and years. In contrast, when investing in a data center, costs can suddenly spike and even create cash flow issues, leading to borrowing.

The more detailed version is that there’s a cost called CapEx (Capital Expenditure.) This is money spent on buying, upgrading and maintaining physical assets. For example, if a company buys a server for $12,000, and its lifespan is 4 years, then the company needs to pay $12,000 now. But in its financial results, it can expense the server at $3,000 per annum across 4 years.

OpEx stands for Operating Expense. Say that the same company, instead of buying a server, leases one from an IaaS provider, paying $3,500 annually. Every year, the company pays $3,500. The big difference? In the case of buying the server outright, the company needs to pay $12,000 as a one-off, but can only write down $3,000 annually. This results in a $3,000 loss, on paper, for the first year, with a $9,000 residual value left. If the company doesn’t have $12,000 to buy the server, it needs a loan with interest on top.

In the case of leasing servers, the company expenses $3,500 every year. This is easy to plan and account for, and puts less pressure on cash flow. In the case of publicly traded companies, investors aren’t shocked when OpEx spending appears in quarterly results. Additionally, in the case of leasing a server, its operating costs are included; but not when purchasing one.
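The arithmetic of the two options can be laid out year by year. Below is a minimal Python sketch using the figures from the example ($12,000 purchase over 4 years vs a $3,500 annual lease). It assumes straight-line depreciation and a price that divides evenly across the lifespan, and it ignores loan interest and the operating costs of an owned server. The function names are made up for illustration.

```python
def purchase_schedule(price: int, lifespan_years: int) -> list[dict]:
    """CapEx: all cash leaves in year 1, the expense is spread evenly."""
    annual_expense = price // lifespan_years  # assumes an even split
    return [
        {
            "year": year,
            "cash_out": price if year == 1 else 0,
            "expense": annual_expense,
            "book_value": price - annual_expense * year,
        }
        for year in range(1, lifespan_years + 1)
    ]


def lease_schedule(annual_fee: int, years: int) -> list[dict]:
    """OpEx: cash out and expense are the same amount every year."""
    return [
        {"year": year, "cash_out": annual_fee, "expense": annual_fee}
        for year in range(1, years + 1)
    ]


buy = purchase_schedule(12_000, 4)
lease = lease_schedule(3_500, 4)

total_buy_cash = sum(row["cash_out"] for row in buy)
total_lease_cash = sum(row["cash_out"] for row in lease)
```

In year 1, buying means $12,000 of cash out against a $3,000 expense and a $9,000 remaining book value, while leasing means $3,500 on both counts. Over the 4 years the lease costs more in total in this example, but the payments are flat and predictable.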

In the case of Uber, the chip shortage of 2020-22 created situations where hardware purchases scheduled for certain months were significantly delayed. Not only did this impact the data centers’ capacity, it also impacted financials and cashflow.

By relying on the Cloud, more of Uber’s spending would go to OpEx instead of CapEx, and so have less impact on cash flow and the bottom line.

Uber acquired a number of companies, none of which ran their own data centers. One acquisition was particularly interesting: Postmates, a business acquired in 2020 for $2.65B. Uber’s leadership noticed Postmates’ infrastructure costs were significantly lower, as a percentage, than what Uber spent on its own infrastructure.

It was understood that Postmates – which was built on top of AWS – had less complex use cases than Uber. For example, it ran from one availability zone in a single region, compared to Uber with services spread across two regions and around 10 zones. Still, Postmates’ relatively low infrastructure cost was one more signal for Uber to investigate.

Running on a single zone carries the risk of outages being catastrophic. AWS’s US-East-1 region has had several outages that disrupted services for hours and took down a good part of the internet. The impact was global because some worldwide AWS services – like IAM (Identity and Access Management) – only run from this region. For example, on 7 December 2021, Amazon’s US-East-1 region was disrupted for several hours due to an automated activity to scale capacity across the data center, which then caused network congestion across the region. What end users saw was Netflix, Disney+ and Delta Airlines services stop working properly, and also the likes of Alexa and Ring security cameras.

Over the years, Uber’s engineering leadership has looked closely at ways to move to the Cloud. I’m told the engineering team leadership ran the numbers on what such a move would cost and save, and a full move wasn’t feasible at the time.

The software layer. Although they are talked about less than hardware considerations, there are software ones as well.

For example, Uber used an in-house storage platform called Schemaless. Back around 2014, when Schemaless was built, it made sense for Uber to invest in a custom storage solution. However, as some projects within Uber discovered, Schemaless started to fall behind in capabilities compared to what Google offered. This was one reason that some teams started to onboard to Google Spanner: it could give them the data consistency and scale that Uber’s in-house storage platform could not.

More recently, some Uber teams started using Google Looker and Google Data Studio for report visualization. Some teams within Uber put a lot of effort into building reporting internally - on top of Uber’s Dashbuilder stack - but Google’s tools were just more feature rich.

Deciding to stay fully on-prem means that you need to build and maintain additional software layers and tools - and even if you invest a lot of time and effort, chances are those tools will not be on par with the commercial offerings of AWS, Google Cloud, Azure and other public Cloud providers.

4. Cloud basics

Before tackling this topic, let’s cover terminology as it can mean different things at different Cloud providers.

Data center: a physical location housing a group of networked computer servers. “Location” means different things at Cloud providers and companies with their own data centers:

A room. For smaller companies building their own data center, a location can start out as just a room in a building. The more machines are in this room, the more likely temperature management becomes a problem.

A building. Any reasonably-sized data center occupies an entire building for the exclusive purpose of hosting and operating networked computers. Most companies with their own data centers use an entire building, often designed with activities like automated maintenance in mind. This is the case for Azure, which specifies “Azure datacenters are unique physical buildings—located all over the globe—that house a group of networked computer servers.”

A facility with several buildings. Larger data centers can span several buildings within a location.

For data centers, there are several considerations:

Physical security. How easy is it to access the data center? What security measures are in place to keep it safe?

Infrastructure. Data centers need to be designed to be resilient in operation. Back-up power infrastructure is usually a given, as are heating, ventilation and air conditioning systems (HVAC,) and fire suppression. Redundancy in networking and internet connections is also a must.

Sustainability. Data centers consume large amounts of energy. When building a new one, there are opportunities to ensure some or most of the energy supply comes from renewable sources. For example, at the time of publication, both Google Cloud and Oracle Cloud run on 100% renewable energy, while AWS and Azure have committed to run on 100% renewable energy by 2025. Google is committed to using wholly carbon-free energy by 2030, and Azure has committed to be zero-waste and water-positive (to replenish more water than data centers consume) by 2030.

Public Cloud providers disclose details on the physical security, infrastructure and sustainability of their data centers. As public Cloud providers serve many customers at a large scale, they have more incentives – and resources! – to invest in these areas than an in-house data center does.

Availability zone (AZ): This is an isolated data center, in theory. If an AZ has issues like a power outage, networking problems, or even a fire, then other AZs continue operating normally. The intent is that two AZs should not be affected by the same potentially catastrophic event.

This is where Uber and dedicated Cloud providers interpret the concept differently:

Uber: Refers to “zones” as physically separated spaces within the same data center. These are basically separate rooms within the same data center with separate power supply and HVAC equipment. Uber, naturally, had limits on physical location, with only two data centers. However, creating zones within these data centers was sensible, as it gives additional separation and more importantly allows for a gradual move to the Cloud and reduction of data center space.

AWS: An AZ consists of one or more data centers, each with separate power, networking and connectivity, and redundancies for all of these. Two AZs are at least 30 miles from one another, ensuring physical separation, so even extreme natural events like weather or earthquakes shouldn’t disrupt more than one of them. They’re connected by fast, fiber-optic networking, enabling rapid failovers.

Azure: An AZ is “physically and logically separated datacenters with their own independent power source, network, and cooling.” A difference between AWS and Azure is that Azure originally did not have the concept of AZs, introducing them in 2017. To date, not all Azure regions have availability zones.

Google Cloud: A zone is “a deployment area for Google Cloud resources within a region. Zones should be considered a single failure domain within a region.” Interestingly, Google notes that it might not operate all its data centers, saying: “data centers may be owned by Google and listed on the Google Cloud locations page, or they may be leased from third-party data center providers.”

Until 2017, a major difference between AWS and Azure was how Azure only supported regions. Since then, Azure has been launching a growing number of AZs across their regions.

At the same time, Uber operates AZs in the same building with physical separation from each other.
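Why does the degree of isolation between zones matter so much? A simple probability sketch shows it. The Python snippet below assumes zone failures are fully independent, which is an idealization, and the 99% per-zone availability figure is a made-up number for illustration, not a figure from any provider or from Uber.

```python
def combined_availability(zone_availability: float, zones: int) -> float:
    """Availability of a service that stays up while at least one zone is up.

    Assumes zone failures are fully independent of one another, so the
    chance of all zones being down at once is the product of the
    individual failure probabilities.
    """
    return 1 - (1 - zone_availability) ** zones


single_zone = combined_availability(0.99, zones=1)
two_zones = combined_availability(0.99, zones=2)
```

Under the independence assumption, a second zone takes the hypothetical 99% to roughly 99.99%. The more two zones share (a building, a power feed, a natural disaster risk), the less that assumption holds, and the smaller the real gain from adding a zone.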

Region: there are differences in what this means across providers, and at Uber:

Uber: a region is effectively a data center, given the company has two in different locations: Phoenix and Washington DC.

Azure: a region is a set of data centers connected via a regional low-latency network.

Google: a geographical location with at least 3 availability zones.

AWS: a geographical location with at least 3 availability zones, connected by a low-latency fiber network. AZs are usually located 30-60 miles apart.

I talked with an AWS engineer who’s of the opinion that Azure, by calling a “region” what AWS calls an “availability zone”, is craftily implying Azure has far more regions than AWS. The language used to describe availability zones undoubtedly differs between Cloud providers, so do your research on what your provider means by it, and how important the distinction is for your use cases.

Network edge location: a data center designed to deliver services with the lowest possible latency. These data centers are physically close to large groups of users, usually major cities. Content delivery networks (CDNs) mostly consist of a large number of network edge locations and direct connections to other networks, like internet service providers and cloud providers.

While Cloud providers tend to provide network edge locations – for example, AWS has more than 400, Azure more than 100 and Google Cloud also more than 100 – there are dedicated CDN and edge providers offering much higher coverage and sometimes more sophisticated features.

We touched previously on Edge computing in the interview with Malte Ubl, CTO at Vercel, who said in July:

“One of the most exciting things in tech is the move to Edge computing. Vercel, Cloudflare and other companies give the ability for clients to run code; not on the client, but very close to the client, therefore on the Edge. This area is very exciting.

“When folks first started to think about this, everyone thought it would be great. However, when you look a little closer, you realize there’s lots of complexity. Deployed to the Edge, your code could be running 20 to 50 milliseconds away from the user. That might be a lot better than if it ran on the US East data center which is 150 milliseconds away.”

When it comes to Edge Network providers – often called Content Delivery Network (CDN) providers – the leaders in the market are:

Cloudflare: the market leader among CDN providers, with roughly 75% market share as of January 2023, according to W3Techs. Cloudflare has data centers in 285 cities and 11,500 networks (ISPs, Cloud providers, enterprises) connected. Data centers are strategically placed to reach 95% of the world’s population within 50 milliseconds (ms.)

Akamai: a popular choice, especially for larger tech companies. The company boasts of having more than 4,200 locations, 1,400 networks in 135 countries, and claims to be the world’s largest Edge platform.

Fastly: another popular choice. The company has data centers in 78 cities, and one of its strengths is a 150ms mean global purge time for clearing cached content – likely the lowest among major providers. In 2022, Cloudflare published details on how it does cache purging and published a p50 global purge time of 1.31 seconds.

Other providers include Stackpath, Gcore, CacheFly, Edgio.

It usually makes little sense for a business to build its own edge network and Uber has not, instead utilizing CDN providers or network edge locations within Cloud providers.

There are a handful of companies for which investing in their own Edge network once made sense, including Netflix and Facebook. Netflix built Open Connect, a globally distributed CDN, while Facebook also keeps growing its Edge network with both Points of Presence networking installations and co-located local caches.

Takeaways

What are some takeaways from Uber’s time operating its own data center? As a former Uber engineer and manager, with direct experience of data center issues, I can confirm running your own data centers is very hard, even with great people.

On the other hand, we engineers learned plenty from Uber’s less conventional approach. Thanks to the open engineering culture, we had front row seats for the creation of zones in a DC and migrations to them, the building of a new data center, and the challenge of creating multi-zone, multi-region services.

For me, Uber’s move to the Cloud illustrates there’s an increasingly small number of companies for whom it’s worth operating large data centers and achieving better returns by manufacturing hardware via an ODM, instead of buying it from an OEM.

Uber built its data centers in 2014 at a time when there were fewer Cloud providers and capabilities were more limited. Although Uber has contracted with Google Cloud and Oracle, back in 2014 neither vendor had offerings Uber could have taken advantage of. Then, Google Compute Engine had been Generally Available (GA) for only a year, and Oracle Cloud’s initial public release was still two years off.

So what’s changed since then, what kind of ground work did Uber do over the course of 5 years to enable its move to public Clouds, and what will Uber’s move mean in practice? We’ll dive into these questions in Part 2 of this series, coming this Thursday (9 March.)

Updates to the article:

9 March: added details of Uber’s first, outsourced data center with Peak Hosting, and details on the software layer.

10 March: added details on how Uber’s Eats business took up some of the freed up capacity when Covid-19 started, and details on software automation for Uber’s data centers.

15 March: corrected the detail on Azure’s AZs, and added details on Google Cloud’s zones. Thanks to Ravneet Shah and Jonathan Shaw at Allica Bank for flagging the inaccuracy on Azure’s availability zones!

Thank you to @Metallurgist and the several other software engineers who gave early feedback on this article.
