Shipping to Production

Approaches for shipping code to production reliably, every time.

👋 Hi, this is Gergely with a subscriber-only issue of the Pragmatic Engineer Newsletter.

Q: As an engineer at a fast-growing startup, I’d like to learn more about how small and large companies ship code to production. Are there any ‘best practices’ worth following, or does everyone come up with their own approach?

Great question. How to ship code to production in a way that is both fast and reliable is a question more engineers and engineering leaders should educate themselves on. Teams and companies that can do both – ship quickly and frequently, and with good quality – have a big advantage over competitors who struggle with either constraint.

In this issue we cover:

The extremes of shipping to production.

Typical processes at different types of companies.

Principles and tools for shipping to production responsibly.

Additional verification layers and advanced tools.

Taking pragmatic risks to move faster.

Deciding which approach to take.

Other things to incorporate into the deployment process.

If you’re not yet a subscriber, you can read a shorter version of this article on The Pragmatic Engineer Blog: Shipping to Production.

1. The extremes of shipping to production

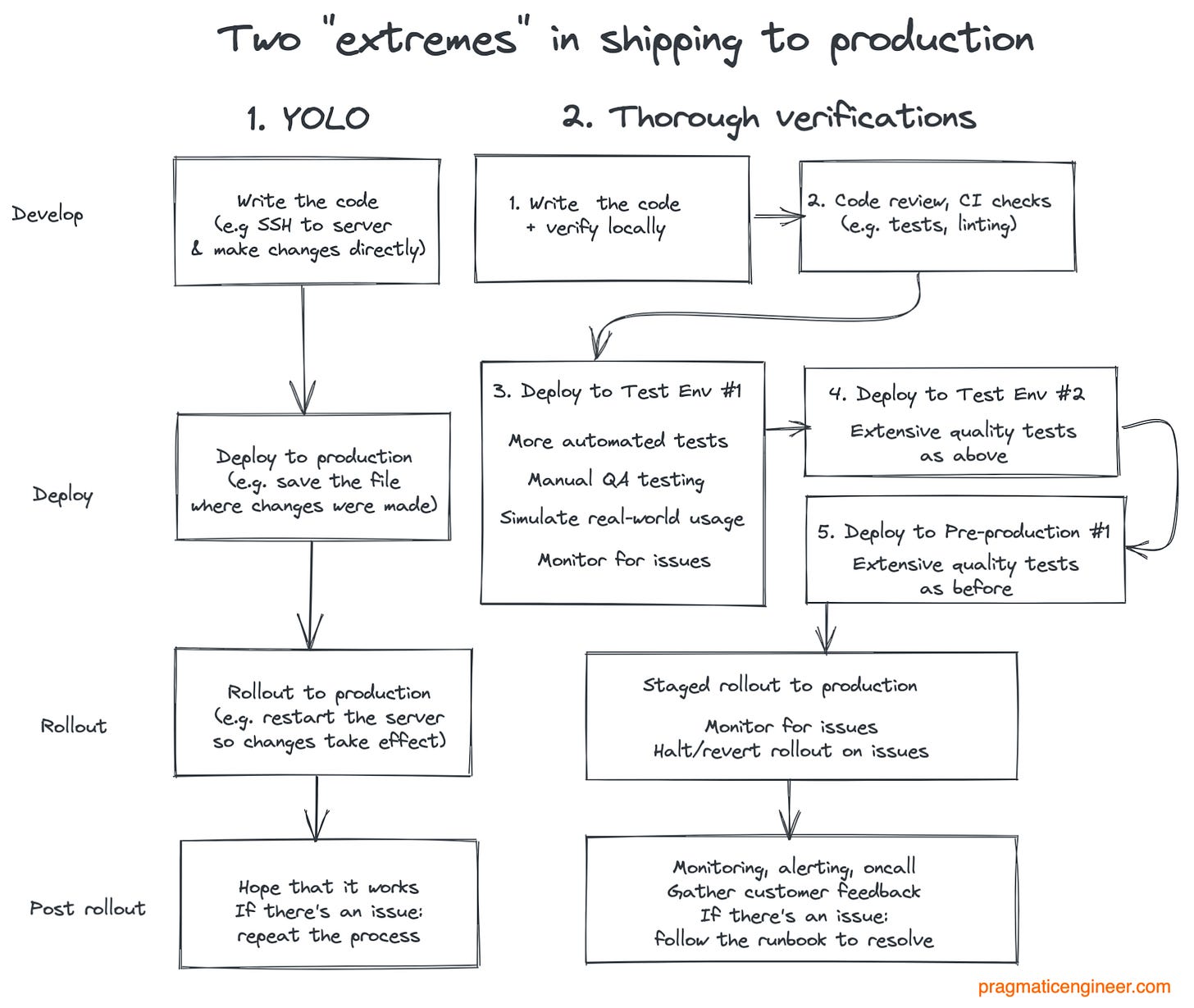

When it comes to shipping code, it’s good to understand the two extremes of how code can make it to users. The table below shows the ‘thorough’ way and the ‘YOLO’ way.

1. YOLO shipping - YOLO stands for “you only live once.” This is the approach used by many prototypes, side projects, and unstable products such as alpha or beta versions. In some cases, it’s also how urgent changes make it into production.

The idea is simple: make the change in production, then see if it works – all in production. Examples of YOLO shipping include:

SSH into a production server → open an editor (e.g. vim) → make a change in a file → save the file and / or restart the server → see if the change worked.

Make a change to a source code file → force land this change without a code review → push a new deployment of a service.

Log on to the production database → execute a production query fixing a data issue (e.g. modifying records which have issues) → hope this change has fixed the problem.

YOLO shipping is the fastest way to get a change into production. However, it also has the highest likelihood of introducing new issues, as this type of shipping has no safety nets in place. With products that have few or no production users, the damage done by introducing bugs into production can be low, and so this approach is justifiable.

YOLO releases are common with:

Side projects.

Early-stage startups with no customers.

Mid-sized companies with poor engineering practices.

Urgent incident resolution at places without well-defined incident handling practices.

As a software product grows and more customers rely on it, code changes need to go through extra validation before reaching production. Let’s go to the other extreme: a team obsessed with doing everything possible to ship close to zero bugs into production.

2. Thorough verification through multiple stages is where a mature product with many valuable customers tends to end up, when a single bug may cause major problems. For example, if bugs result in customers losing money, or customers leaving for a competitor, this rigorous approach might be used.

With this approach, several verification layers are in place, with the goal of simulating the real-world environment with ever more accuracy. Some layers might be:

Local validation. Tooling for software engineers to catch obvious issues.

CI validation. Automated tests like unit tests and linting running on every pull request.

Automation before deploying to a test environment. More expensive tests such as integration tests or end-to-end tests run before deployment to the next environment.

Test environment #1. More automated testing, like smoke tests. Quality assurance engineers might manually exercise the product, running both manual tests and doing exploratory testing.

Test environment #2. An environment where a subset of real users – such as internal company users or paid beta testers – exercise the product. The environment is coupled with monitoring, and the rollout is halted at any sign of regression.

Pre-production environment #3. An environment where the final set of validations is run. This often means running another set of automated and manual tests.

Staged rollout. A small subset of users get the changes, and the team monitors key metrics to confirm they remain healthy and checks customer feedback. The staged rollout strategy is chosen based on the riskiness of the change being made.

Full rollout. As the staged rollout widens, at some point the changes are pushed to all customers.

Post-rollout. Issues will come up in production, and the team has monitoring and alerting set up, and a feedback loop with customers. If an issue arises, it is dealt with by following a standard process. Once an outage is resolved, the team follows incident review and postmortem best practices, which we’ve covered before.
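The layers above behave like a sequence of gates: a change only moves on once the previous layer has passed. Here is a minimal Python sketch of that idea. The stage names and check functions are hypothetical placeholders for illustration, not any particular company's pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Stage:
    """One verification layer; `check` returns True when the layer passes."""
    name: str
    check: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> Optional[str]:
    """Run stages in order and stop at the first failure.

    Returns the name of the failing stage, or None if every stage passed.
    """
    for stage in stages:
        if not stage.check():
            return stage.name
    return None


# Hypothetical checks; real ones would run linters, test suites, and so on.
stages = [
    Stage("local validation", lambda: True),
    Stage("CI validation", lambda: True),
    Stage("integration tests", lambda: False),  # simulate a failing layer
    Stage("test environment #1", lambda: True),
]

failed_at = run_pipeline(stages)
print(failed_at or "all stages passed")
```

The further right a failure is caught in this list, the more expensive it usually is to fix, which is why the cheap checks sit at the front.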

Such a heavyweight release process can be seen at places like:

Highly regulated industries, such as healthcare.

Telecommunications providers, where it is not uncommon to spend ~6 months thoroughly testing major changes before shipping them to customers.

Banks, where bugs could result in significant financial losses.

Traditional companies with legacy codebases that have little automated testing. These companies still want to keep quality high and are happy to slow down releases by adding more verification stages.

2. Typical processes at different types of companies

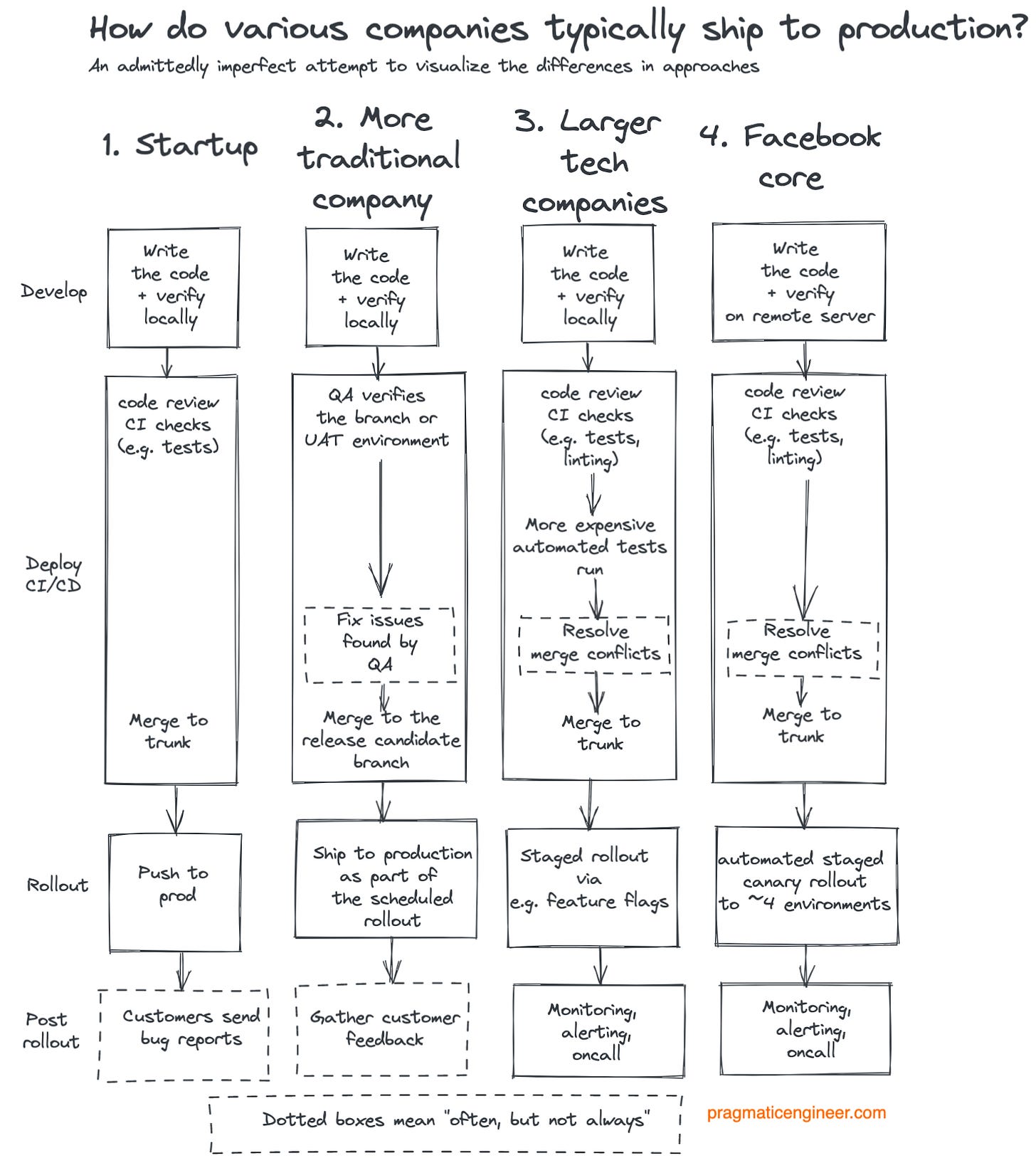

What are “typical” ways to ship code into production at different types of companies? Here’s my attempt to generalize these approaches, based on observation. Not every company’s practices will match these processes, but I hope it illustrates some differences in how companies approach shipping to production.

Notes on this diagram:

1. Startups: Typically do fewer quality checks than other companies.

Startups tend to prioritize moving fast and iterating quickly, and often do so without much of a safety net. This makes perfect sense if they don't – yet – have customers. As the company attracts users, these teams need to start to find ways to not cause regressions or ship bugs. They then have the choice of going down one of two paths: hire QAs or invest in automation.

2. Traditional companies: Tend to rely more heavily on QA teams.

While automation is sometimes present in more traditional companies, it's very typical that they rely on large QA teams to verify what they build. Working on branches is also common; it's rare to have trunk-based development in these environments.

Code mostly gets pushed into production on a schedule, for example weekly or even less frequently, after the QA team has verified functionality.

Staging and UAT (User Acceptance Testing) environments are more common, as are larger, batched changes shipped between environments. Sign-offs from either the QA team or the product manager – or project manager – are often required to progress the release from one stage to the next.

3. Large tech companies: Typically invest heavily in infrastructure and automation related to shipping with confidence.

These investments often include automated tests running quickly and delivering rapid feedback, canarying, feature flags and staged rollouts.

These companies aim to keep a high quality bar, but also to ship immediately when quality checks are complete, working on trunk. Tooling to deal with merge conflicts becomes important, given some of these companies can see over 100 changes on trunk per day.
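Feature flags and staged rollouts often rest on the same small mechanism: deterministically assigning each user to a bucket, then enabling the change for a growing share of buckets. The Python sketch below shows one common way to do this with a hash. The flag name, user IDs, and function names are all hypothetical, and real feature flag systems add targeting rules, kill switches, and persistence on top.

```python
import hashlib


def bucket(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, flag_name: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return bucket(user_id, flag_name) < rollout_percent


# Example: widen the rollout of a hypothetical flag from 10% to 50%.
users = [f"user-{i}" for i in range(1000)]
at_10 = {u for u in users if is_enabled(u, "new_checkout", 10)}
at_50 = {u for u in users if is_enabled(u, "new_checkout", 50)}
print(len(at_10), len(at_50))
```

Because the bucket depends only on the user and the flag, a user who sees the change at 10% keeps seeing it at 50%, and setting the percentage back to 0 turns the change off for everyone without a new deployment.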

4. Facebook core: Has a sophisticated and effective approach few other companies possess.

Facebook's core product is an interesting one. It has fewer automated tests than many would assume. On the other hand, it has exceptional automated canarying, where code is rolled out through 4 environments: from a testing environment with automation, through one that all employees use, through a test market in a smaller region, to all users. At every stage, if the metrics are off, the rollout automatically halts.

In the article Inside Facebook’s engineering culture, a former Facebook employee relates how an Ads issue was caught before causing an outage:

“I was working in Ads and I remember this particular bad deploy which would have made a massive financial loss to the company. The code change broke a specific ad type.

“This issue was caught in stage 2 out of 4 stages. Each stage had so many health metrics monitored that if something went wrong, these metrics would almost always trigger - like this first safety net had done.

“All Facebook employees used the before-production builds of the Facebook website and apps. This means that Facebook has a massive testing group of ~75,000 people testing their products before they go into production, and flagging any degradations.”
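To make the idea of an automatically halting rollout concrete, here is a small Python sketch. It is an illustration only, not Facebook's actual system: the stage names loosely follow the four environments described above, and the metric names, numbers, and the 5% tolerance are made-up assumptions.

```python
from typing import Dict, Optional

# Hypothetical stage names, loosely following the four environments described above.
STAGES = ["automated testing", "employees", "test market", "all users"]


def is_healthy(baseline: Dict[str, float], observed: Dict[str, float],
               tolerance: float = 0.05) -> bool:
    """Return False if any metric is missing or falls more than `tolerance`
    (as a fraction) below its baseline. Higher values are assumed to be better."""
    for name, expected in baseline.items():
        value = observed.get(name)
        if value is None or value < expected * (1 - tolerance):
            return False
    return True


def roll_out(baseline: Dict[str, float],
             metrics_by_stage: Dict[str, Dict[str, float]]) -> Optional[str]:
    """Advance stage by stage; return the stage where the rollout halted,
    or None if it reached all users with healthy metrics."""
    for stage in STAGES:
        if not is_healthy(baseline, metrics_by_stage.get(stage, {})):
            return stage
    return None


# Made-up numbers: one metric collapses once employees start using the build.
baseline = {"ad_impressions": 1000.0, "page_load_success_rate": 0.99}
observed = {
    "automated testing": {"ad_impressions": 1000.0, "page_load_success_rate": 0.99},
    "employees": {"ad_impressions": 400.0, "page_load_success_rate": 0.99},
}
halted_at = roll_out(baseline, observed)
print(halted_at or "rolled out to all users")
```

In this sketch a stage with no metrics at all also counts as unhealthy, so the rollout stops rather than proceeding blind.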

3. Principles and tools for responsibly shipping to production

What principles and approaches are worth following if you want to ship changes to production responsibly? Here are my thoughts. Note that I am not saying you need to follow all the ideas below. But it’s a good exercise to ask why you would not implement any one of these steps: