AI Tooling for Software Engineers: Reality Check (Part 2)

How do software engineers using AI tools view their impact at work? We sidestep the hype to find out how these cutting-edge tools really perform, from the people using them daily.

In mid-2024, GenAI tools powered by large language models (LLMs) are widely used by software engineering professionals, and there’s no shortage of hype about how capable these tools will eventually become. But what about today? We asked the software engineers who use them.

Our survey on this topic was filled out by 216 tech professionals. We then analyzed this input to offer a balanced, pragmatic, and detailed view of where LLM-powered development tooling stands right now.

In Part 1 of this mini-series we covered:

Survey overview

Popular software engineering AI tools

AI-assisted software engineering workflows

The good

The bad

What’s changed since last year?

In this article, we cover:

What are AI tools similar to? AI is akin to autocomplete, or pairing with a junior developer, or a tutor/coach, say survey responses.

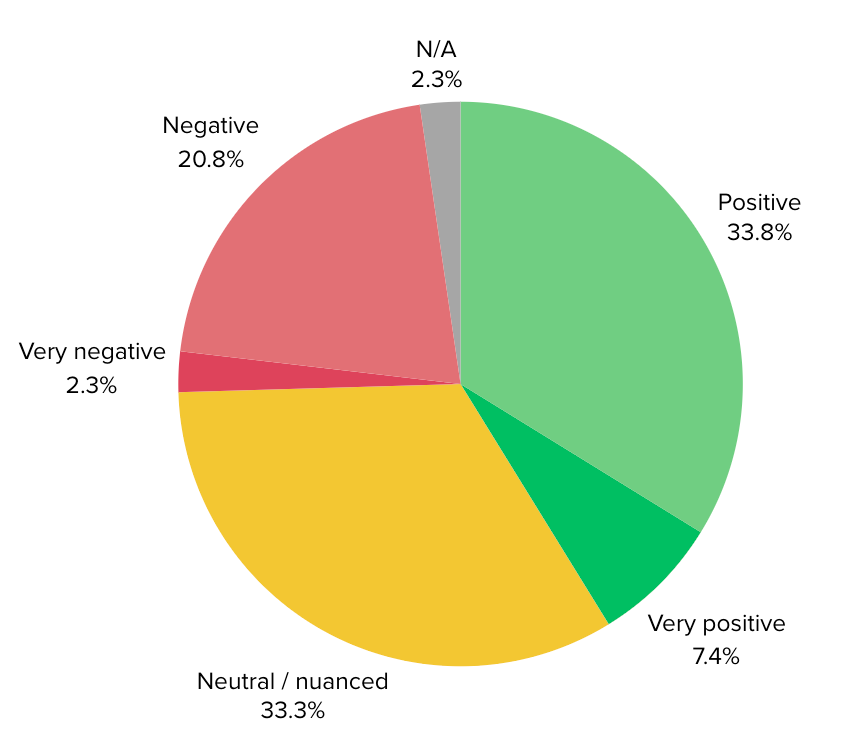

State of AI tooling in 2024: opinions. There’s a roughly equal split between positive, neutral, and negative views on how useful AI tooling is for software development.

Critiques of AI tools. Many respondents are unimpressed by productivity gains and are concerned about copy-pasted output, among other criticisms.

Changing views about AI tooling over time. After using the tools for 6 months or longer, most developers are either more positive, or slightly less enthusiastic than before. A minority feel more negatively.

Which tasks can AI already replace? Simple, constrained problems, and “junior” work and testing are tasks the tools can handle today. Plenty of engineers believe AI tools won’t fully replace any software engineering role or responsibility.

Time saved – and what it’s used for. Some respondents save almost no time, while others say AI tools create 20% more time for other things.

In Part 3 we wrap up this mini-series with:

AI usage guidelines

Internal LLMs at Meta, Microsoft, Netflix, Pinterest, Stripe

Reservations and concerns

Advice for devs to get started with AI tools

Advice for engineering leaders to roll out AI tooling, org-wide

Measuring the impact of GenAI tools

AI strategies at tech companies

1. What are AI tools similar to?

In the survey, we asked professionals for their views on GenAI tooling, and several described it in metaphorical terms. Here’s a selection of answers to the question: “AI tooling is like __:”

… autocomplete. “As an addition to enhance developer productivity, it will become somewhat "invisible" and something we take for granted, like code auto-completion or grammar hints.” – CTO, 18 years of experience (YOE)

… pairing with a junior programmer. “Copilot is like pair programming with a junior programmer. I use it to do the rote work of filling in the details of my larger design.” – principal engineer, 45 YOE

… an eager intern. “It’s like having a really eager, fast intern as an assistant, who’s really good at looking stuff up, but has no wisdom or awareness. I used to think it would improve to quickly become a real assistant, but the usefulness of the tools haven’t meaningfully improved in the past year, so our current LLM approach feels like it might be petering out until we get a new generation.” – director, 15 YOE

… a tutor or coach. “It’s great as a tutor or coach, answering very specific questions quickly and painlessly. It gets you started or unblocked really well.” – distinguished engineer, 25 YOE

… an assistant. “For me it's more of an assistant which can help me in day-to-day tasks.” – architect, 9 YOE

… pre-Google search. “It is like when we didn't know which search engine was best and we had to multihome and try multiple different sites (Inktomi, Hotbot, Altavista, etc.) in the pre-Google days.” – data scientist, 20 YOE

2. State of AI tooling in 2024: opinions

We asked readers for their views on the current state of AI tooling, and the replies span the spectrum from positive to negative. Interestingly, the split between positive, neutral, and negative views is roughly equal.

Let’s dive into each group, starting with the upbeat.

Moderately positive views

The most commonly-mentioned benefits of AI tools:

“Useful.” Lots of respondents say AI tooling is useful, but not particularly groundbreaking in its current form. A few quotes:

“Copilot is generally useful and can save a lot of time. Code completion systems have been around for years and this seems a natural evolution, if not a big step up. As an "old timer," I'm always a bit skeptical, but so far it's been a fairly good augmentation for my team.” – Director of Engineering, 30 YOE

“It saves me half the time on a task, for about 10% of my tasks. I hope it improves significantly, and I am not worried about my job for the next 5 years." – software engineer, 17 YOE

“It works well, as the name implies, as a copilot. I feel it's sometimes quicker to ask a question than trying to hunt down an answer on Google, especially since copilot has some context from the workspace. It's also useful as an initial PR, even if I don't agree with or implement all its suggestions. For code generation, I found it more useful for writing unit tests than actual business logic.” – software engineer, 4 YOE

“It's a decent autocomplete. It’s good at answering simple questions when I'm working with a new language, and has basically replaced Stackoverflow for me. My expectations are that it will only get a little more powerful, and still only be useful for learning or simple completion, but the big value add will come from training on our specific codebase to enable things like generating the entire boilerplate for a test.” – software engineer, 13 YOE

“It’s super, super useful day to day, to speed up routine tasks and help with the empty whiteboard problem” – principal engineer, 15 YOE

“It's pretty good. I give it 6 out of 10” – software engineer, 2 YOE

A software engineer with 7 years of experience gives their top uses for GitHub Copilot:

“Explain this to me” feature (most useful)

Chatbot integrating with company-internal documentation (useful)

Code generation (helpful if you know what to look for)

Autocomplete (on a par with older autosuggest tools, perhaps a bit more capable)

“A solid choice for prototyping and getting started.” Several respondents distrust the outputs of AI, but see its usefulness as a kickstarter:

Building a prototype for testing things

Putting together proofs of concept

Generating boilerplate code or scaffolding for a project

Getting started on a new project

Understanding a new codebase

A sounding board for questions

Several experienced engineers mention large time savings from using AI tools for prototyping and for building first proofs of concept, which need not be production-ready, secure, or even correct. Quick and dirty, done fast: that’s the goal! Survey responses suggest AI tooling helps greatly with this.

“Good for learning and research.” Many people note these strengths:

Good at searching for relevant coding-related information

Helpful for explaining coding concepts, syntax, and use cases

Good for learning about technical and non-technical domains. A senior engineer uses it to learn about functional programming concepts and accounting, and says they learn faster than with textbooks

Helpful for looking up information on technologies and open source frameworks/libraries which the tool was trained on, or can access

“A great interactive rubber ducking / pair-programmer tool.” Talking with the tool to get unstuck is mentioned by many devs. “Rubber ducking” is a problem-solving method of describing a problem to a real or metaphorical rubber duck; the idea is that explaining it out loud helps lead to a solution.

Talking to an AI tool is different because it responds, unlike the duck. It can raise overlooked pointers and ideas. A staff engineer with 15 YOE calls it an “interactive pair programmer.” The same engineer also shares that it’s less helpful with anything more than simple pieces of code.

“I can see it evolving further.” Several respondents say there’s plenty of room for improvement. A CTO with 20 YOE hopes to see more progress on integration touchpoints:

“Currently it can be a good pairing buddy for common languages/frameworks that don't change too often. In future, would be great to see some more integrations - i.e. suggestions for code improvements from IDE, ability to dive into projects and provide feedback on alternative approach from design perspective, tests auto-generation based on specs and existing codebase"

“Still learning how to use it.” It takes time and experimentation to figure things out. Software engineer Simon Willison (25+ YOE, co-creator of the Django framework) has publicly said it took him quite a while to learn how to use GitHub Copilot productively. Meanwhile, a backend developer with 6 YOE shares that they are doing the same:

“I am not intimidated by AI technologies. On the contrary, I am trying to incorporate such tools into my work as much as possible. I feel I should invest more time in learning how to use AI tools. The generation of tests could potentially be improved. Current tools such as Copilot and CodiumAI have limitations for load context and do not often utilize existing fixtures by Pytest. I still write complex business logic manually, and often make corrections by hand because the AI cannot do it, or I’m too lazy to debug the prompt."

Very positive views

“Translating” between programming languages works great. Several developers share that a valuable use case is giving the AI tool code in one language and asking for it to be translated into another. Thanks to their architecture, LLMs translate well between programming languages, as well as between human languages. An engineering manager (25 YOE) says:

“It’s great for taking an idea prototyped in one language (bash), and translating it into another language (python). I can run both programs and assume that they return the same output.”
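Rather than assuming the two versions behave the same, one option is to run both on the same input and compare the results. The sketch below is only an illustration of that idea: the `same_output` helper and the two word-counting commands are hypothetical stand-ins (both are Python one-liners here so the example runs anywhere). In practice, the first command would be the original script, for example `["bash", "count_words.sh"]`, which is an assumed file name.

```python
import subprocess
import sys


def same_output(cmd_a, cmd_b, stdin_text=""):
    """Run two commands on the same stdin; True if exit code and stdout match."""
    def run(cmd):
        return subprocess.run(cmd, input=stdin_text, capture_output=True, text=True)

    a, b = run(cmd_a), run(cmd_b)
    return (a.returncode, a.stdout) == (b.returncode, b.stdout)


# Hypothetical stand-ins for "the original" and "the translation":
# both count whitespace-separated words read from stdin.
ORIGINAL = [sys.executable, "-c", "import sys; print(len(sys.stdin.read().split()))"]
TRANSLATED = [sys.executable, "-c",
              "import sys; print(sum(1 for _ in sys.stdin.read().split()))"]

if __name__ == "__main__":
    for sample in ["", "one", "a b c\n", "tabs\tand\nnewlines"]:
        print(repr(sample), same_output(ORIGINAL, TRANSLATED, sample))
```

Matching output on a handful of inputs is evidence, not proof, that the translation is faithful. Edge cases such as empty input or unusual whitespace are worth adding to the list of samples.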

“It’s great!” Plenty of responses are strongly positive. Here are some from the survey about Copilot and ChatGPT:

Works very well on languages less used by developers

An “invaluable autocomplete+++,” as shared by a senior software engineer, 10 YOE

“Understands the codebase and quickly catches up to its style” - software architect, 25 YOE

Helps figure out issues with toolchain and development environments - senior engineer, 20 YOE

Wonderful for generating unit tests for classes - staff engineer, 20 YOE

A chat AI assistant is the first recourse when stuck on a problem - senior software engineer, 7 YOE

“Game changer.” A sizable number of responses are bullish about the productivity gains of the tech today. The quotes below come from professionals with at least 10 YOE, and plenty of hands-on experience:

“GitHub Copilot has been a game changer for day-to-day productivity in our company. GPT-4 is also widely used for documentation, meeting notes, and requirements gathering. I assume it will only get better, and become more effective at reducing toil and helping teams produce better software, particularly with the non-core-coding tasks (documentation, unit tests, sample code and starter kits, templates, etc).” – staff engineer, 30 YOE

“It’s an enormous productivity boost, even with existing tools. I expect it to improve over time, though not necessarily by orders of magnitude.” – principal engineer, 25 YOE

“It’s essential for any modern engineer to have AI tooling in their stack. Otherwise, it’s a massive productivity hit.” – engineering team lead, 12 YOE

“Game changer. Makes writing test cases really easy. Shifts most of the coding I do to thinking/planning from writing. I love using it to write security policies, too!” – Director of security engineering, 12 YOE

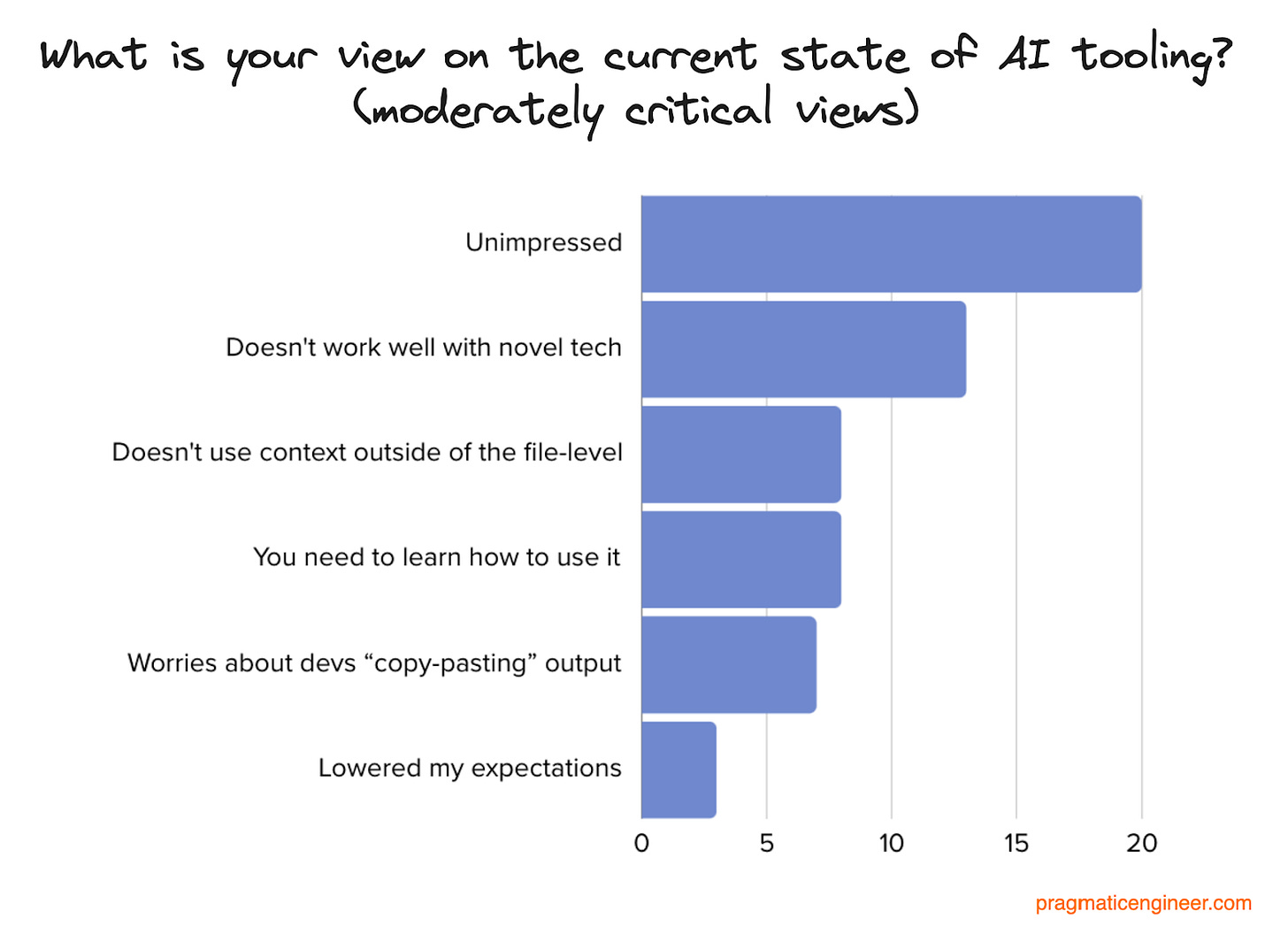

3. Critiques of AI tools

“Unimpressed”

The single most-cited criticism is that AI tools are not living up to their promise. Complaints from active users included:

Stagnation. The top gripe. LLM technology hasn’t changed in more than a year, since the release of GPT-4, and it’s not getting better. Approaches like RAG can only bring so much improvement, according to two software architects with 15 and 20 YOE.

GitHub Copilot is the “only” tool that works. Several people say Copilot is the only tool they’re not entirely dissatisfied with, after testing several. However, these developers add that Copilot gets suggestions wrong as often as it’s right, and that unless you’re in a rush, it’s better to write code without automated suggestions.

Useful for simple stuff, poor at more complex tasks. It’s good at speeding up simple-enough refactoring, tedious use cases and “routine” tasks like config file changes, and working with regular expressions. But it doesn’t help much when working across a complex codebase, especially one that follows less common coding or architecture patterns.

Limited use cases. Outside of repetitive changes and boilerplate generation, it’s not useful because it introduces hard-to-spot bugs.

Too much effort. Outside of code suggestions, it’s a lot of effort to get something complex that works as expected.

Unreliable. You need to stay on your toes because just when you start to trust it, it generates non-working, buggy code.

A “fancy autocomplete.” This is the view of an engineering team whose members have used the tool for around 6 months. Hallucinations are why it’s not trusted more.

More hindrance than help. A staff engineer with 10 YOE is correcting AI output more than benefiting from it. They expect things to improve, but see little value in the tooling as it stands.

Tedious. It’s more work to bulk-generate tests, and tools can stumble when faced with atypical software architecture. A software developer (9 YOE) expects things to improve when the tool can “digest” complete projects.

“Even the boilerplate is not what I need.” A senior software engineer with 5 YOE voices disappointment that instructed boilerplate code ended up doing the opposite of what was intended. For context, this engineer is just getting started on AI tools; perhaps working on prompts will improve outputs.

Concerns about engineers copy-pasting AI output

Half a dozen engineers with more than 10 years of experience voiced the concern that less experienced colleagues copy-paste code output from AI tools without knowing what it will actually do. Seasoned developers list these gripes:

Forgetting that AI-generated code is unverified. “Engineers copy-pasting code is nothing new, it’s existed since the StackOverflow days. However, StackOverflow has a human touch and verification; AI has neither.” (principal engineer, 11 YOE).

Trusted as a source of truth, but isn’t one. “It works well if you have enough knowledge to understand the output and to challenge it. It’s very dangerous for junior devs as they tend to use it as a source of truth, which it is not, at least not today.” (software engineer, 6 YOE)

Doesn’t truly help newer devs. “These tools are directionally helpful for seniors who can identify errors, but detrimental to juniors who won't know the difference.” (AI practice lead, 17 YOE)

Degrades people’s problem-solving skills. “I worry the younger generation will become dependent on LLMs, and that their own critical thinking and problem-solving skills will diminish.” (DevOps engineer, 8 YOE). I’d add that older generations tend to underestimate how well the next generation adapts. Our recent Gen Z survey in this publication showed the new generation of talent is very capable, so we might need to worry less!

Fair criticism related to the architecture of LLMs

The criticism below stems from how LLMs are built. LLMs are trained on a large corpus of text, and then generate the next most likely token based on the input they are given. Because of this architecture, they perform worse when encountering scenarios that were not part of their training data, and are prone to hallucination. We previously covered how LLMs like ChatGPT work, as shared by the ChatGPT team.
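To make the “next most likely token” idea concrete, here is a deliberately tiny sketch. It is not how production LLMs work: they use neural networks over long contexts, while this toy only counts which token followed which in a made-up training string. The function names and the corpus are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each token, which tokens follow it in the training text."""
    follows = defaultdict(Counter)
    tokens = corpus.split()
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def next_token(follows, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    if token not in follows:
        return None
    return follows[token].most_common(1)[0][0]


# Made-up training text: two loop headers, split into tokens by spaces.
corpus = "for i in range ( n ) : for j in range ( m ) :"
model = train_bigram(corpus)

print(next_token(model, "in"))     # range
print(next_token(model, "while"))  # None
```

The toy model has nothing to say about `while` because that token never appeared in its training text. A real LLM does not return nothing in that situation; it still produces a plausible-looking continuation, which is one way to think about where hallucinations come from.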

“Doesn’t work well on custom tech stacks and novel architectures.” Experienced engineers say that current tools can’t get context on an entire code repository, or on all projects within a company. This lack of context gets in the way of helpful output for projects with custom code structures and coding approaches.

Here’s the problem according to a staff engineer (30 YOE):

“The killer features for us would be a broader understanding of our ecosystem of APIs and libraries, to recommend common standard approaches within our company when writing new code or building new products.”

This criticism makes sense given how LLMs are trained: most training data is open source code. At the same time, technology used at larger companies is often home-grown, so these tools need additional context to work optimally. This context could be provided via retrieval-augmented generation (RAG), which we covered previously. We also previously covered how LLMs work and are trained.
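As a rough illustration of how RAG supplies that missing context, the sketch below picks the most relevant internal note for a question and puts it in front of the question in the prompt. Everything here is assumed for the example: the notes, the `payments_client` name, and the word-overlap scoring, which stands in for the embedding-based search that real systems typically use.

```python
def score(query, doc):
    """Count how many distinct query words also appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def retrieve(query, docs, k=2):
    """Return the k documents that share the most words with the query."""
    return sorted(docs, key=lambda d: score(query, d), reverse=True)[:k]


def build_prompt(query, docs, k=2):
    """Prepend the retrieved documents to the question as context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Use this internal context:\n{context}\n\nQuestion: {query}"


# Hypothetical company-internal notes the base model was never trained on.
INTERNAL_DOCS = [
    "Use the payments_client library for all billing calls",
    "Service logs must go through the structlog wrapper",
    "Lunch menu is posted every Monday",
]

prompt = build_prompt("How do I make billing calls", INTERNAL_DOCS, k=1)
print(prompt)
```

The resulting prompt would then be sent to the model, which can now answer with the company’s own conventions in view instead of guessing from public code alone.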

“Doesn’t use context beyond file-level.” A valid criticism is that current LLM tools focus on the file level, not the project level. Several developers voice hope that AI tooling will be reimagined to work at project level, and that AI assistants will get context beyond the single open file.

A software developer using these AI tools for more than two years says:

“There is not yet a good tool with the speed, quality and developer experience of GH copilot, which is also aware of the full project context (types, interfaces, overall patterns, file structure,) and that we can ask questions to without leaving the IDE. I’ve tried GH Copilot X, which is an attempt at this, but it's definitely not there yet and has been a net negative.

I would pay for a tool that solves this problem smoothly. At the same time, I wouldn't conform with anything less than GH Copilot in terms of speed and quality of its autocomplete.”

And a principal engineer with 30 years of experience puts it like this:

“Copilot is good at very small, self contained tasks, but those are relatively rare. It’s bad at anything that needs broad context across the codebase.”

“Need to learn how to use it.” Several developers share that they had to invest a lot of time and effort in getting the tool to be useful. Some observations:

You need to guide it, akin to helping an inexperienced engineer (staff engineer, 8 YOE)

It needs a lot of handholding and domain expertise to make it useful (software engineer, 9 YOE)

It can be hard to ask the “right” question, and sometimes it’s easier to just skip it (software engineer, 13 YOE)

You need to have specific expectations, e.g. when generating tests, or how to have it work with data (senior software engineer, 25 YOE)

Hit and miss. “Sometimes it's like it's reading my mind, sometimes it's just so totally wrong it outweighs any benefits.” (SRE, 15 YOE)

A staff engineer with 25 YOE gives an excellent summary:

“It's extremely useful if you understand its limitations. I get the best results when I use it to fill in the blanks.

Asking it to write a whole class, or an entire test file is dangerous because it'll give you something that looks right. However, when you dig in, there’s all kinds of things that just won't work. [But] it's gotten noticeably better since the early days.

I trust it enough now that if I lay out the code – set up the class name, important method name, and parameters – then I can just kinda tab through the details while double checking each chunk it gives me. Having good accompanying tests is also important.”
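The workflow in this quote might look something like the sketch below. The `RateLimiter` class is a hypothetical example, not something from the survey: the class name, method name, parameters, and docstrings are the part an engineer would write by hand, the method body is the kind of detail an assistant might fill in, and the test is what lets you check each suggested chunk.

```python
class RateLimiter:
    """Allow at most `limit` calls per `window` seconds (hypothetical example)."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.calls = []  # timestamps of the calls that were allowed

    def allow(self, now):
        """Return True and record the call if it fits in the window, else False."""
        # The kind of body an assistant might suggest; review it line by line.
        self.calls = [t for t in self.calls if now - t < self.window]
        if len(self.calls) >= self.limit:
            return False
        self.calls.append(now)
        return True


def test_rate_limiter():
    limiter = RateLimiter(limit=2, window=10.0)
    assert limiter.allow(now=0.0)
    assert limiter.allow(now=1.0)
    assert not limiter.allow(now=2.0)  # third call inside the window is rejected
    assert limiter.allow(now=11.0)     # earlier calls have aged out of the window


if __name__ == "__main__":
    test_rate_limiter()
    print("ok")
```

Writing the signature and the test first keeps the design decisions with the engineer, and gives a quick way to catch a suggestion that only looks right.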

“Hallucination still a problem.” Several engineers raise this ongoing issue. Here’s the take of a solutions architect with 15 YOE:

“Sometimes it hallucinates methods that don’t exist. I’ve used trials of several services, and haven’t seen long-term improvement in any one."
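One cheap guard against hallucinated methods is to check that a suggested name actually resolves before relying on it. The `exists` helper below is a hypothetical sketch using only the Python standard library. It only handles names of the form `module.attribute`, and it does not replace running the code or reading the documentation.

```python
import importlib


def exists(dotted_name):
    """Return True if `module.attr[.attr...]` resolves to a real object."""
    module_name, _, attr_path = dotted_name.partition(".")
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    parts = attr_path.split(".") if attr_path else []
    for part in parts:
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


print(exists("json.loads"))  # True
print(exists("json.parse"))  # False: Python's json module has no parse()
```

A type checker or an IDE’s “go to definition” covers the same ground in day-to-day work; the point is simply to verify a suggestion rather than trust that it looks right.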

“Doesn’t work well for brainstorming.” A systems engineer finds AI tools a poor choice for brainstorming: they can generate lots of ideas, but their detailed descriptions legitimize bad ideas! Also, the tools cannot tell what’s useful for a business.

“Lowered my expectations as a less experienced developer.” A few devs share that over time AI tools seem to have gotten less reliable, or to not work as hoped. Interestingly, this observation comes from developers with less than 3 years of experience.

Perhaps this could be a case of the tech being at a point where it’s hard to judge when the output is not what’s needed. Or is it that more experienced developers come to every new tool with low expectations because they know that reality hardly ever lives up to hype?