New trend: programming by kicking off parallel AI agents

More devs are experimenting with kicking off coding agents in parallel. Also: comparing interviews at Meta, Amazon, Uber, and 5 other large tech companies

Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer Newsletter. In every issue, I cover Big Tech and startups through the lens of senior engineers and engineering leaders. Today, we cover two topics from The Pulse #149. Full subscribers received the article below two weeks ago. To get articles like this in your inbox every week, subscribe here.

With agentic command line interfaces like Claude Code, OpenAI Codex, Cursor, and many others going mainstream, I’m seeing more software engineers experiment with running several agents simultaneously on separate tasks.

I talked with Anthropic engineer Sid Bidasaria about how Claude Code is built. At the end of our conversation, he mentioned that he’d had a few agents running the whole time, and that this made him more productive. Similarly, software engineer Simon Willison, whom I consider an AI engineering expert, has posted about “embracing the parallel coding agent lifestyle.” He writes:

“For a while now, I’ve been hearing from engineers who run multiple coding agents at once—firing up several Claude Code or OpenAI Codex instances at the same time, sometimes in the same repo, sometimes against multiple checkouts or git worktrees.

I was pretty skeptical about this at first. AI-generated code needs to be reviewed, which means the natural bottleneck on all of this is how fast I can review the results. It’s tough keeping up with just a single LLM given how fast they can churn things out, where’s the benefit from running more than one at a time if it just leaves me further behind?

Despite my misgivings, over the past few weeks I’ve noticed myself quietly starting to embrace the parallel coding agent lifestyle.

I can only focus on reviewing and landing one significant change at a time, but I’m finding an increasing number of tasks that can still be fired off in parallel without adding too much cognitive overhead to my primary work.”

Simon shares what works for him, citing research, maintenance tasks, and directed work as use cases.

It’s interesting to consider whether parallel work with agents could overturn decades of software engineering practices. Let’s assume that software engineers who kick off multiple agents at once do become more productive than “single-threaded” peers who work on one problem at a time. If so, the practice could spread as engineers seek to be more productive, or simply try not to fall behind colleagues who are getting more done than before.

But in the pre-AI era, many productive engineers did their best work in a flow state. It goes something like this:

Understand the moving parts

Build a solution, validate it, iterate on it

When satisfied with how it works, submit a pull request for code review — or, if no review is needed, just merge and ship it

Interrupting this process breaks the flow state, and getting back into it takes time. That’s why software engineers tend to prioritize focus time for coding work.

Of course, this doesn’t hold for every highly productive engineer. When I was an engineering manager, the most productive engineers on my team did a lot of context switching and were adept at juggling several things at once. Here’s a typical day for a senior engineer acting as a tech lead:

Code reviews. Arrive at the office and go through code reviews left open from the previous night

Coding. Get some of their own coding work done

Standup. The usual

More coding. Get the work done. At least, that’s the idea. In reality:

Interruptions: code reviews, requests for help, taps on the shoulder. The most productive engineer on a team is regularly asked to review code to unblock teammates or to help someone who’s stuck, or gets a tap on the shoulder from the manager (me, sorry!) asking for help with something.

I wonder if senior+ engineers will be “naturals” at working with parallel AI agents, given their existing habits and what they already do:

Keep parallel workstreams in their heads: for example, what each team member is doing at any one time.

Code reviews across several workstreams: they’re the go-to code reviewer, and usually review all code changes across 2-5 workstreams. They may not write the code, but they know when it’s correct.

Can handle interruptions: they’ve learned how to make progress when their focus is continually being broken.

Good at directing colleagues: because they’re regularly interrupted, they’ve also learned how to delegate and explain urgent work to team members.

Writing skill: these engineers write a lot of code reviews, draw up documents like RFCs that outline work, create tickets to break down projects, and critique colleagues’ efforts, all of which requires communicating effectively in writing.

With AI agents, the qualities that make a good tech lead are within reach for any engineer who wants to be more productive. So far, the only people I’ve heard of using parallel agents successfully are senior+ engineers.

Then again, this workflow hasn’t stuck with everyone: I asked Flask creator Armin Ronacher about his experience with parallel agents. He told me:

“I sometimes kick off parallel agents, but not as much as I used to do.

The thing is: it’s only so much my mind can review!”

But we’re in new territory now that any dev can run coding agents in parallel. Will it make engineers more productive, or just make them feel more productive? Perhaps, over time, engineers who do one thing at a time and stay focused will be shown to produce more reliable software. Or maybe working with parallel agents will turn out to let more issues slip through and require more iterations, which would wipe out any gains.

We will find out. Personally, I expect more devs to experiment with parallel agents.

My sense is that software engineering basics matter more when working with AI agents. I’ve started using AI agents for my own side projects, with success so far. Here’s what I do:

Testing: all my side projects have unit tests, because I learned not to trust my own work without validation

Small, descriptive tasks: I keep each task small in scope, explain it, and give examples

Refactoring: every third or fourth task is for the agent to refactor some code it wrote (e.g., extract into a method, move to a new class)

Review: I track what the agent does

Do small things personally: I keep my IDE open and make any change that’s only a few lines by hand, so I stay aware of the codebase

I keep hearing the same from other engineers: “mandating” engineering practices, like having the agent pass all tests before continuing, leads to better results. This is unsurprising, and it’s why these practices are getting popular. AI agents are non-deterministic and, to some extent, unreliable; these practices make them a lot more reliable and usable.

Comparing interviews at 8 large tech companies

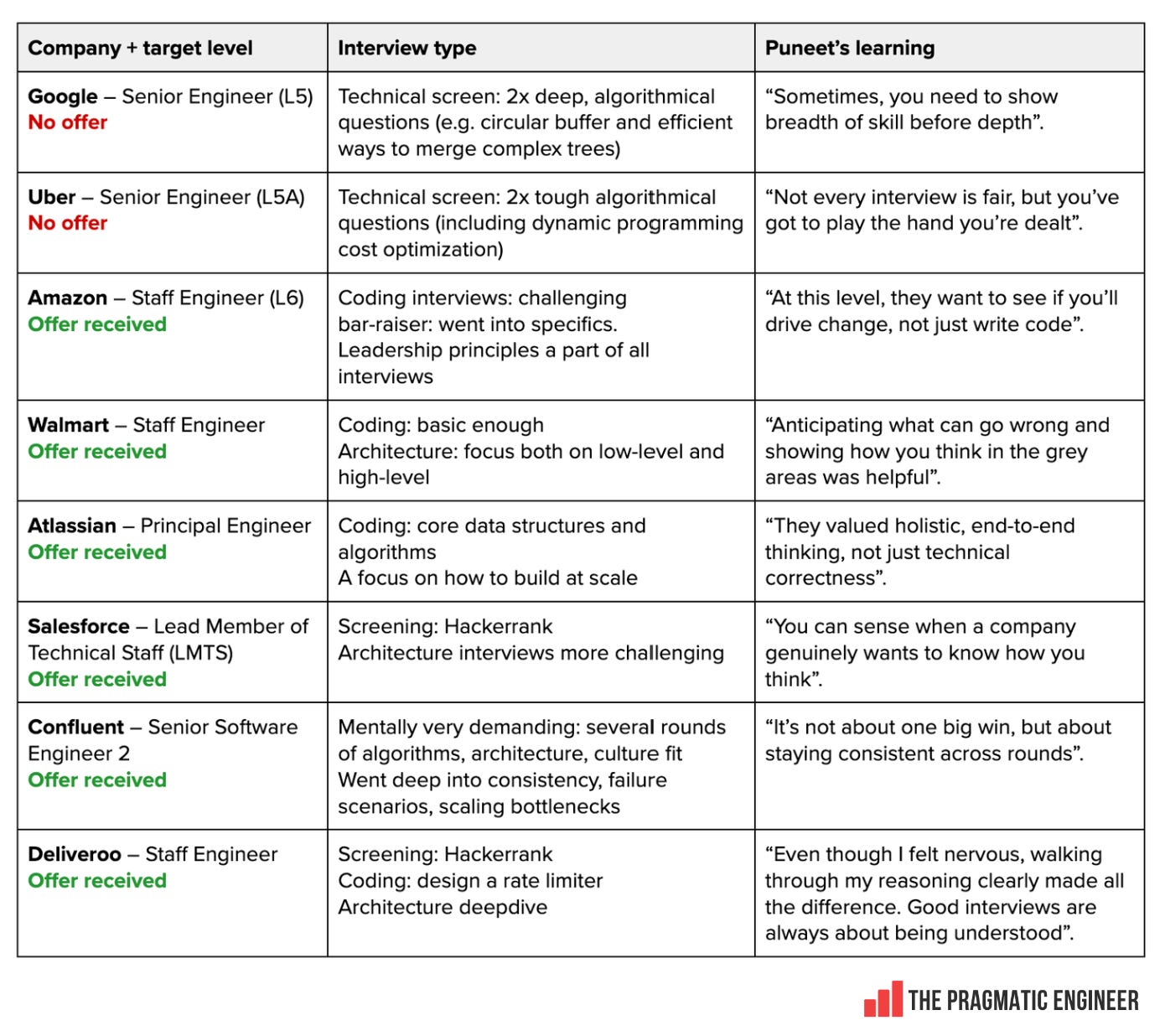

Puneet Patwari recently accepted an offer to join Atlassian as a Principal Software Engineer. Over three months, he told me, he did more than 60 interviews at 11 companies, and after accepting the Atlassian offer he dropped out of 3 more interview processes, including Meta’s. He then compared the interview processes of the largest companies.

A few more observations that Puneet shared with me:

Amazon: the Amazon Hiring Manager round was one of the most unique I’ve ever experienced. We got so engrossed in the discussion that it took 160 minutes instead of the scheduled 60! We had to take a break partway through the interview.

Atlassian: the leadership craft (LC) and values rounds were crucial in determining that I’d be levelled at Principal. Of course, the Systems Design interview was also key here. Atlassian puts a lot of emphasis on LC for Principal engineers.

Salesforce: the system design round was based on the actual job requirements. It was a migration problem, and the interviewer wanted to check whether I could own a project end-to-end with customers at the centre of it.

Confluent: when I say it was the most mentally demanding interview, what I mean is that every skill was tested twice: 2x data structures and algorithms (DSA), 2x System Design, and 2x behavioural interview rounds.

I cannot stress enough how important behavioural interviews are at the Staff+ levels. Doing well on these interviews was decisive in getting Staff and Principal-level offers. Of course, you needed to do well on coding and systems design, but my sense was that the behavioural parts were make-or-break for levelling and getting an offer.

A few things stand out to me from Puneet’s account of his interviews at leading tech companies:

Algorithmic coding interviews are everywhere! For senior+ positions, you need to get really good at these, including challenging topics like dynamic programming. We cover how to perform well in these in the article, How experienced engineers get unstuck in coding interviews.

Interviews are tough and time-consuming. Even after Puneet had offers, no company shortened its process. Puneet had to decline 3 more interviews, including one at Meta, because by the time they would have come around, he had already accepted the Atlassian offer.

In a tough job market, “top” candidates are still in demand. We’ve covered how challenging the current tech labor market is for jobseekers, yet Puneet interviewed at 11 companies and got 6 offers. His applications needed a lot going for them to pass resume screening: 10+ years of experience, and a role as a Senior Software Engineer at Microsoft. He also showed up very well prepared.

Bad luck can strike at any time. Puneet seems to have been a bit unlucky at Uber: the interviewer came across as rigid and not open to dialogue. Perhaps they were having a tough day, or wanted to get the interview over with. Or it could be what Steve Yegge describes as the interviewer anti-loop.

Congrats to Puneet for accepting the Atlassian position, and thanks for sharing all these learnings!

These were two out of the five topics covered in The Pulse #149. The full edition additionally covers:

ACP (Agent Client Protocol). A new protocol built by the Zed team, which tries to make it easier to build AI tooling for IDEs than MCP allows

AI security tooling works surprisingly well? AI-powered security tools seem good at identifying security flaws in mature open source projects

Is AI the only engine of US economic growth? Forty percent of US GDP growth this year is based on AI-related spending, while 60% of venture capital goes into AI. Hopefully, it won’t end up as a bubble that bursts, as happened in 2001

Read the full issue here, and check out today’s The Pulse here.